1. [CV] Scaling Vision Transformers to 22 Billion Parameters

2. [LG] Project and Probe: Sample-Efficient Domain Adaptation by Interpolating Orthogonal Features

3. [RO] Controllability-Aware Unsupervised Skill Discovery

4. [CL] The Wisdom of Hindsight Makes Language Models Better Instruction Followers

5. [CL] Binarized Neural Machine Translation

[LG] Read and Reap the Rewards: Learning to Play Atari with the Help of Instruction Manuals

[CV] Weakly-supervised Representation Learning for Video Alignment and Analysis

[LG] Diagnosing and Rectifying Vision Models using Language

[CV] Invariant Slot Attention: Object Discovery with Slot-Centric Reference Frames

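Summary: scaling Vision Transformers to 22B parameters; sample-efficient domain adaptation by interpolating orthogonal features; controllability-aware unsupervised skill discovery; wise hindsight relabeling that makes language models better instruction followers; binarized neural machine translation; learning to play Atari games with the help of instruction manuals; weakly supervised representation learning for video alignment and analysis; diagnosing and rectifying vision models with language; object discovery with slot-centric reference frames.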

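1. [CV] Scaling Vision Transformers to 22 Billion Parameters

M Dehghani, J Djolonga, B Mustafa, P Padlewski, J Heek, J Gilmer, A Steiner…

[Google Research]

Scaling Vision Transformers to 22B parameters

Key points:

- Proposes a recipe for training a 22B-parameter ViT efficiently and stably; it is the largest dense ViT to date;
- The resulting model keeps improving as it scales and achieves state-of-the-art results on several benchmarks;
- In terms of shape/texture bias, ViT-22B is more aligned with human perception, and it shows gains in fairness and robustness;
- The model can serve as a distillation teacher for training a smaller student model that reaches state-of-the-art performance at that smaller scale.

One-sentence summary:

Proposes a recipe for efficiently and stably training a 22B-parameter ViT (ViT-22B), the largest dense ViT to date. The resulting model is evaluated on a variety of downstream tasks and shows improving performance with scale, a better tradeoff between fairness and performance, alignment with human visual perception, and improved robustness.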

The scaling of Transformers has driven breakthrough capabilities for language models. At present, the largest large language models (LLMs) contain upwards of 100B parameters. Vision Transformers (ViT) have introduced the same architecture to image and video modelling, but these have not yet been successfully scaled to nearly the same degree; the largest dense ViT contains 4B parameters (Chen et al., 2022). We present a recipe for highly efficient and stable training of a 22B-parameter ViT (ViT-22B) and perform a wide variety of experiments on the resulting model. When evaluated on downstream tasks (often with a lightweight linear model on frozen features), ViT-22B demonstrates increasing performance with scale. We further observe other interesting benefits of scale, including an improved tradeoff between fairness and performance, state-of-the-art alignment to human visual perception in terms of shape/texture bias, and improved robustness. ViT-22B demonstrates the potential for “LLM-like” scaling in vision, and provides key steps towards getting there.

https://arxiv.org/abs/2302.05442
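The abstract notes that ViT-22B is often evaluated with a lightweight linear model on frozen features. Below is a minimal sketch of such a linear probe in plain Python, assuming binary labels and a logistic-regression probe trained by full-batch gradient descent. The 2-D feature vectors are made up and stand in for embeddings a frozen backbone would produce; this is not the paper's evaluation code.

```python
import math


def train_linear_probe(features, labels, lr=0.5, epochs=200):
    """Binary logistic-regression probe on frozen feature vectors.

    Only the probe's weights and bias are trained; the feature vectors,
    standing in for a frozen backbone's embeddings, are never modified.
    """
    dim = len(features[0])
    w, b, n = [0.0] * dim, 0.0, len(features)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in zip(features, labels):
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            err = 1.0 / (1.0 + math.exp(-z)) - y  # d(log loss)/d(logit)
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


# Hypothetical 2-D "frozen embeddings" for four images of two classes.
frozen = [[1.0, 0.2], [0.9, -0.1], [-1.0, 0.1], [-0.8, -0.3]]
labels = [1, 1, 0, 0]
w, b = train_linear_probe(frozen, labels)
print([predict(w, b, x) for x in frozen])  # [1, 1, 0, 0]
```

Keeping the backbone frozen means only `dim + 1` numbers are learned per binary task, which is what makes this kind of evaluation cheap even for a very large model.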

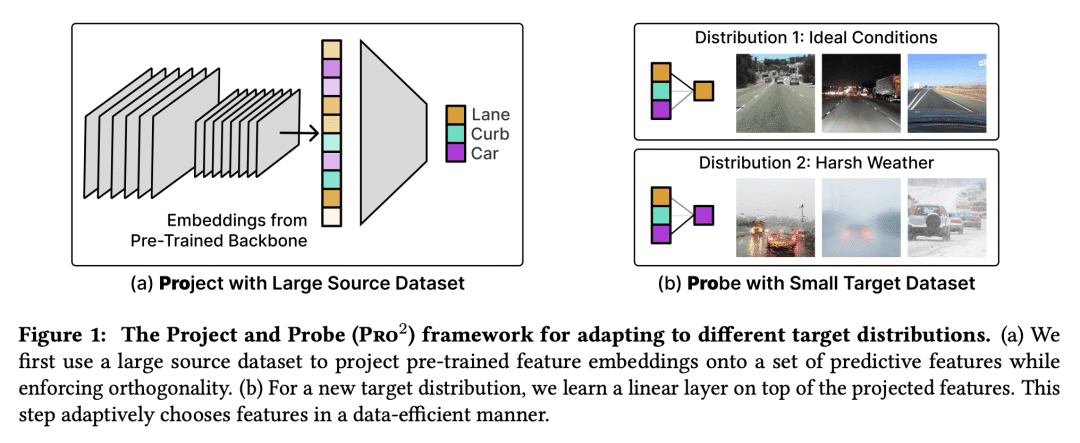

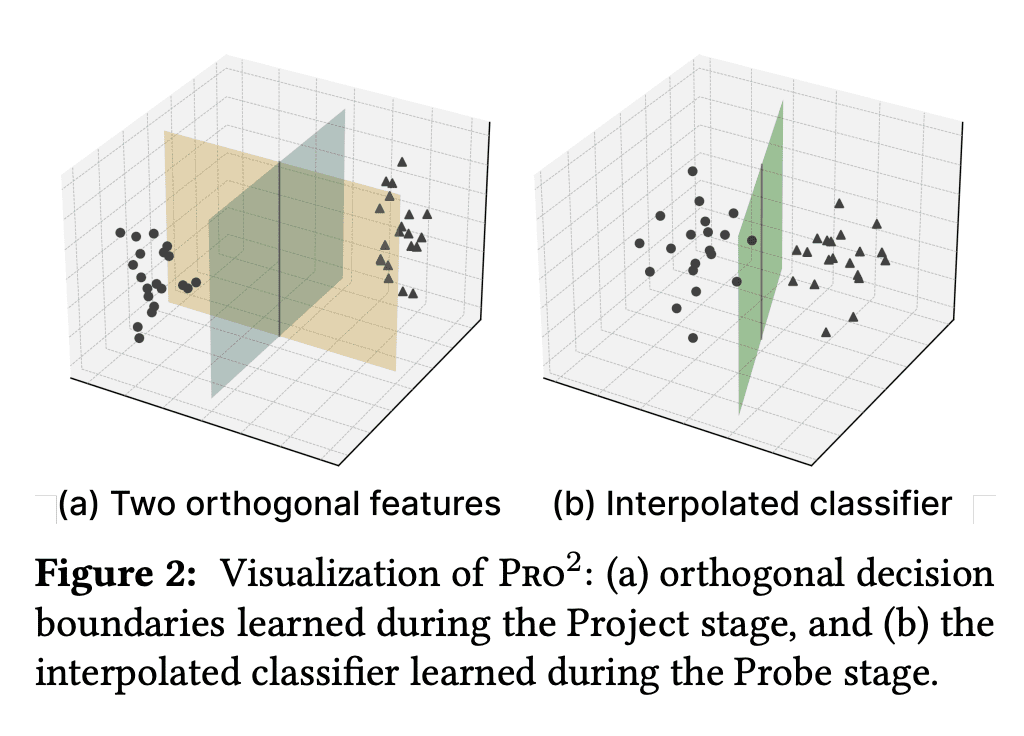

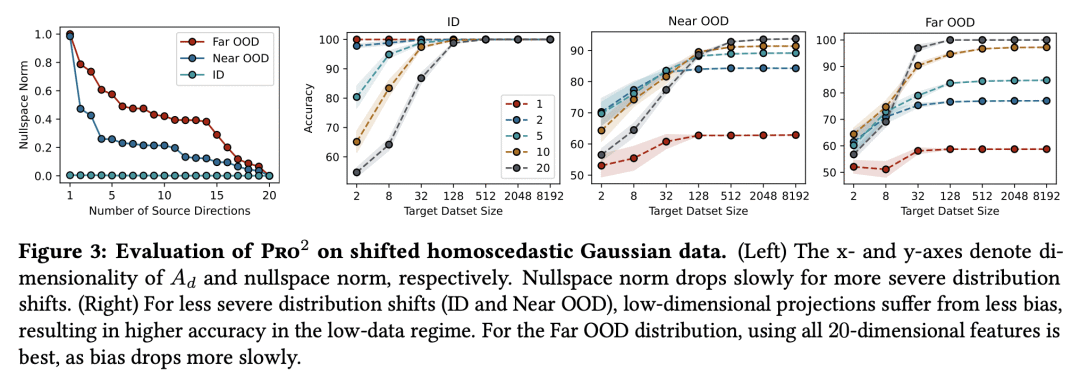

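2. [LG] Project and Probe: Sample-Efficient Domain Adaptation by Interpolating Orthogonal Features

A S. Chen, Y Lee, A Setlur, S Levine, C Finn

[Stanford University & CMU & UC Berkeley]

Project and Probe: sample-efficient domain adaptation by interpolating orthogonal features

Key points:

- Pro² is a lightweight, sample-efficient domain adaptation method. It learns a diverse set of features and adapts to the target distribution by interpolating these features using a small target dataset;
- Pro² learns a linear projection that maps pre-trained embeddings onto orthogonal directions while remaining predictive of the labels in the source dataset. The goal of this step is to learn a variety of predictive features, so that at least some of them remain useful after distribution shift;
- Pro² then uses a small target dataset to learn a linear classifier on top of these projected features. Theoretical and empirical analysis shows that the projection matrix learned by Pro² is optimal for classification in an information-theoretic sense, which yields better generalization thanks to a favorable bias-variance tradeoff;
- Experiments on four datasets show that, given limited target data, Pro² improves performance by 5-15% over prior methods such as standard linear probing.

One-sentence summary:

Proposes Project and Probe (Pro²), a sample-efficient domain adaptation method that learns a diverse set of features and adapts to the target distribution by interpolating these features with a small target dataset. Pro² first learns a linear projection that maps pre-trained embeddings onto orthogonal directions while predicting the source labels, then uses the small target dataset to learn a linear classifier on these projected features. Theoretical and empirical analysis shows that Pro² outperforms prior methods in sample efficiency and generalization.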

Conventional approaches to robustness try to learn a model based on causal features. However, identifying maximally robust or causal features may be difficult in some scenarios, and in others, non-causal “shortcut” features may actually be more predictive. We propose a lightweight, sample-efficient approach that learns a diverse set of features and adapts to a target distribution by interpolating these features with a small target dataset. Our approach, Project and Probe (Pro²), first learns a linear projection that maps a pre-trained embedding onto orthogonal directions while being predictive of labels in the source dataset. The goal of this step is to learn a variety of predictive features, so that at least some of them remain useful after distribution shift. Pro² then learns a linear classifier on top of these projected features using a small target dataset. We theoretically show that Pro² learns a projection matrix that is optimal for classification in an information-theoretic sense, resulting in better generalization due to a favorable bias-variance tradeoff. Our experiments on four datasets, with multiple distribution shift settings for each, show that Pro² improves performance by 5-15% when given limited target data compared to prior methods such as standard linear probing.

https://arxiv.org/abs/2302.05441
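Pro²'s two steps (project, then probe) can be sketched as follows. This is a simplified illustration, not the authors' implementation: we assume binary labels, fit each direction as a bias-free logistic regression on source data, and keep it orthogonal to earlier directions by projecting every gradient step onto their orthogonal complement. The toy data are hypothetical. The probe step then fits another logistic regression on the projected target features.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def remove_components(v, basis):
    """Subtract from v its components along each orthonormal vector in basis."""
    for u in basis:
        c = dot(v, u)
        v = [vi - c * ui for vi, ui in zip(v, u)]
    return v


def fit_logistic(X, y, basis=(), lr=0.5, steps=300):
    """Logistic regression without bias, trained by gradient descent.

    Each gradient is projected onto the orthogonal complement of `basis`,
    so the weight vector (which starts at zero) stays orthogonal to it.
    """
    w = [0.0] * len(X[0])
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, t in zip(X, y):
            err = 1.0 / (1.0 + math.exp(-dot(w, x))) - t
            grad = [g + err * xi for g, xi in zip(grad, x)]
        grad = remove_components(grad, basis)
        w = [wi - lr * g / len(X) for wi, g in zip(w, grad)]
    return w


def project_step(X_src, y_src, k):
    """Learn up to k orthonormal, label-predictive directions on source data."""
    basis = []
    for _ in range(k):
        w = fit_logistic(X_src, y_src, basis=basis)
        norm = math.sqrt(dot(w, w))
        if norm < 1e-12:  # no predictive signal left in the complement
            break
        basis.append([wi / norm for wi in w])
    return basis


def probe_step(basis, X_tgt, y_tgt):
    """Fit a linear classifier on the projected target features."""
    Z = [[dot(u, x) for u in basis] for x in X_tgt]
    return fit_logistic(Z, y_tgt)


# Hypothetical toy data: both features predict the label on the source
# domain, but feature 1 flips sign on the (small) target dataset.
X_src = [[2.0, 0.0], [0.0, 1.0], [-2.0, 0.0], [0.0, -1.0]]
y_src = [1, 1, 0, 0]
X_tgt = [[1.5, -0.5], [-1.5, 0.5]]
y_tgt = [1, 0]

basis = project_step(X_src, y_src, k=2)
v = probe_step(basis, X_tgt, y_tgt)
preds = [1 if dot(v, [dot(u, x) for u in basis]) > 0 else 0 for x in X_tgt]
print(len(basis), preds)  # 2 [1, 0]
```

Both source features carry label information here, so the project step keeps two orthogonal directions. The probe, trained on only two target examples, then reweights them to fit the target domain where feature 1 has flipped.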

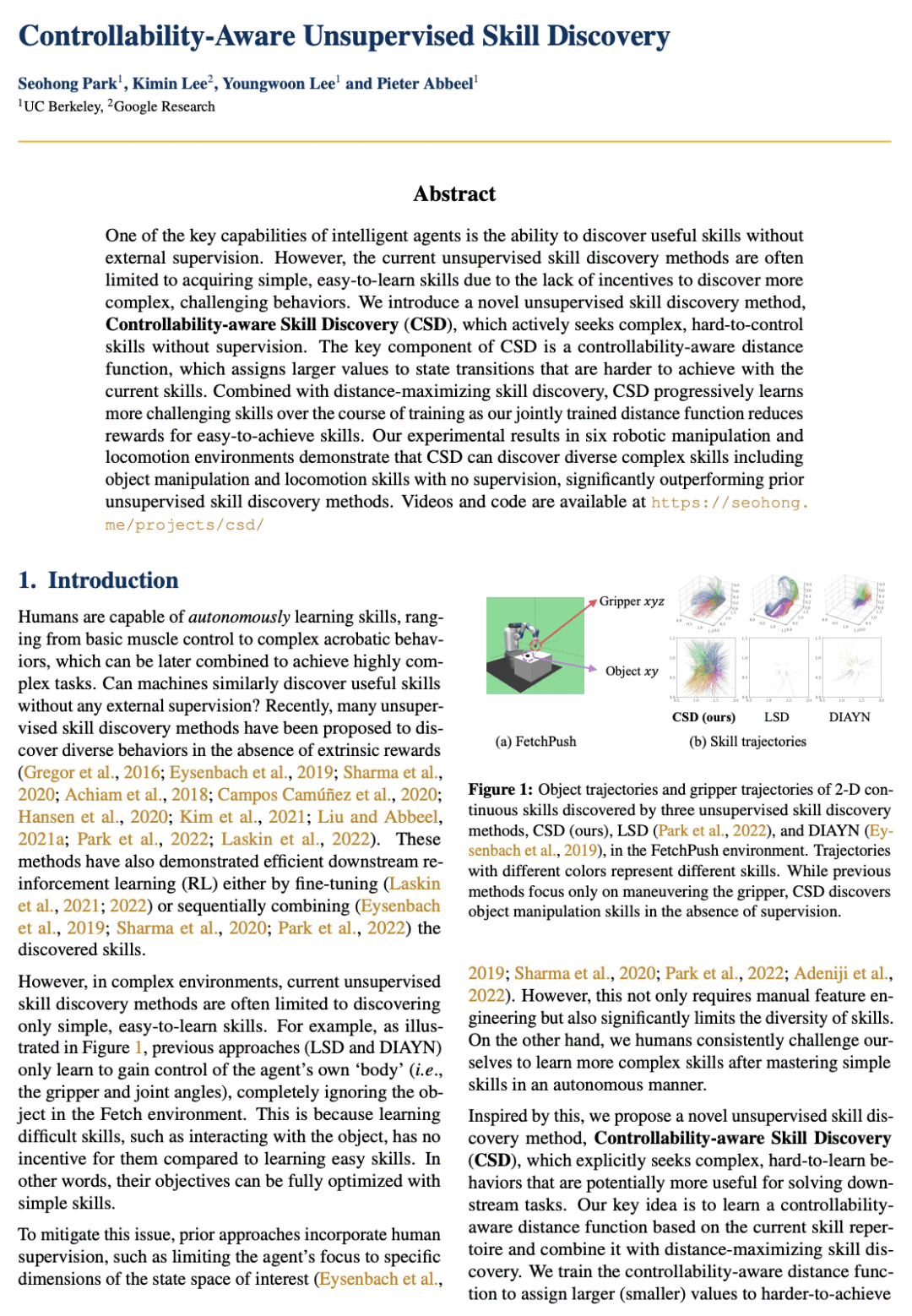

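3. [RO] Controllability-Aware Unsupervised Skill Discovery

S Park, K Lee, Y Lee, P Abbeel

[UC Berkeley & Google Research]

Controllability-aware unsupervised skill discovery

Key points:

- Current unsupervised skill discovery methods are limited in acquiring complex behaviors because they lack incentives to do so;
- CSD is a new unsupervised skill discovery method that seeks hard-to-control skills without supervision;
- A controllability-aware distance function encourages the agent to discover more complex, harder-to-achieve skills;
- Empirical results in six robotic manipulation and locomotion environments show that CSD outperforms prior state-of-the-art skill discovery methods.

One-sentence summary:

Proposes Controllability-aware Skill Discovery (CSD), a new unsupervised skill discovery method that actively seeks complex, hard-to-control skills without supervision. The key component of CSD is a controllability-aware distance function that assigns larger values to state transitions that are harder to achieve with the current skills.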

One of the key capabilities of intelligent agents is the ability to discover useful skills without external supervision. However, the current unsupervised skill discovery methods are often limited to acquiring simple, easy-to-learn skills due to the lack of incentives to discover more complex, challenging behaviors. We introduce a novel unsupervised skill discovery method, Controllability-aware Skill Discovery (CSD), which actively seeks complex, hard-to-control skills without supervision. The key component of CSD is a controllability-aware distance function, which assigns larger values to state transitions that are harder to achieve with the current skills. Combined with distance-maximizing skill discovery, CSD progressively learns more challenging skills over the course of training as our jointly trained distance function reduces rewards for easy-to-achieve skills. Our experimental results in six robotic manipulation and locomotion environments demonstrate that CSD can discover diverse complex skills including object manipulation and locomotion skills with no supervision, significantly outperforming prior unsupervised skill discovery methods. Videos and code are available at this https URL

https://arxiv.org/abs/2302.05103
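The idea of a controllability-aware distance can be illustrated with a simplified stand-in. As an assumption for this sketch, we model the state changes s' - s produced by the current skills with a single state-independent diagonal Gaussian and use the squared Mahalanobis distance under it, so transitions the current skills produce often score low and rare ones score high. CSD itself trains its distance function jointly with the skills; this code does not reproduce that.

```python
def fit_delta_gaussian(transitions, eps=1e-6):
    """Fit a diagonal Gaussian to state changes s' - s (state-independent)."""
    deltas = [[b - a for a, b in zip(s, s_next)] for s, s_next in transitions]
    n, dim = len(deltas), len(deltas[0])
    mean = [sum(d[i] for d in deltas) / n for i in range(dim)]
    var = [sum((d[i] - mean[i]) ** 2 for d in deltas) / n + eps for i in range(dim)]
    return mean, var


def controllability_distance(s, s_next, mean, var):
    """Squared Mahalanobis distance of s' - s under the fitted Gaussian:
    transitions the current skills rarely produce get large values."""
    return sum((b - a - m) ** 2 / v for a, b, m, v in zip(s, s_next, mean, var))


# Hypothetical transitions collected by the current skills: the agent
# mostly moves right along the x-axis and almost never moves in y.
transitions = [((0, 0), (1, 0)), ((1, 0), (2, 0)), ((2, 0), (3.1, 0)), ((3, 0), (3.9, 0.1))]
mean, var = fit_delta_gaussian(transitions)
easy = controllability_distance((0, 0), (1, 0), mean, var)
hard = controllability_distance((0, 0), (0, 1), mean, var)
print(easy < hard)  # True
```

Used as the bound in distance-maximizing skill discovery, a distance like this makes already-easy transitions worth little, which is the incentive the abstract describes for moving on to harder skills.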

4、[CL] The Wisdom of Hindsight Makes Language Models Better Instruction Followers

T Zhang, F Liu, J Wong, P Abbeel, J E. Gonzalez

[UC Berkeley]

明智的事后标记让语言模型成为更好的指令执行器

要点:

-

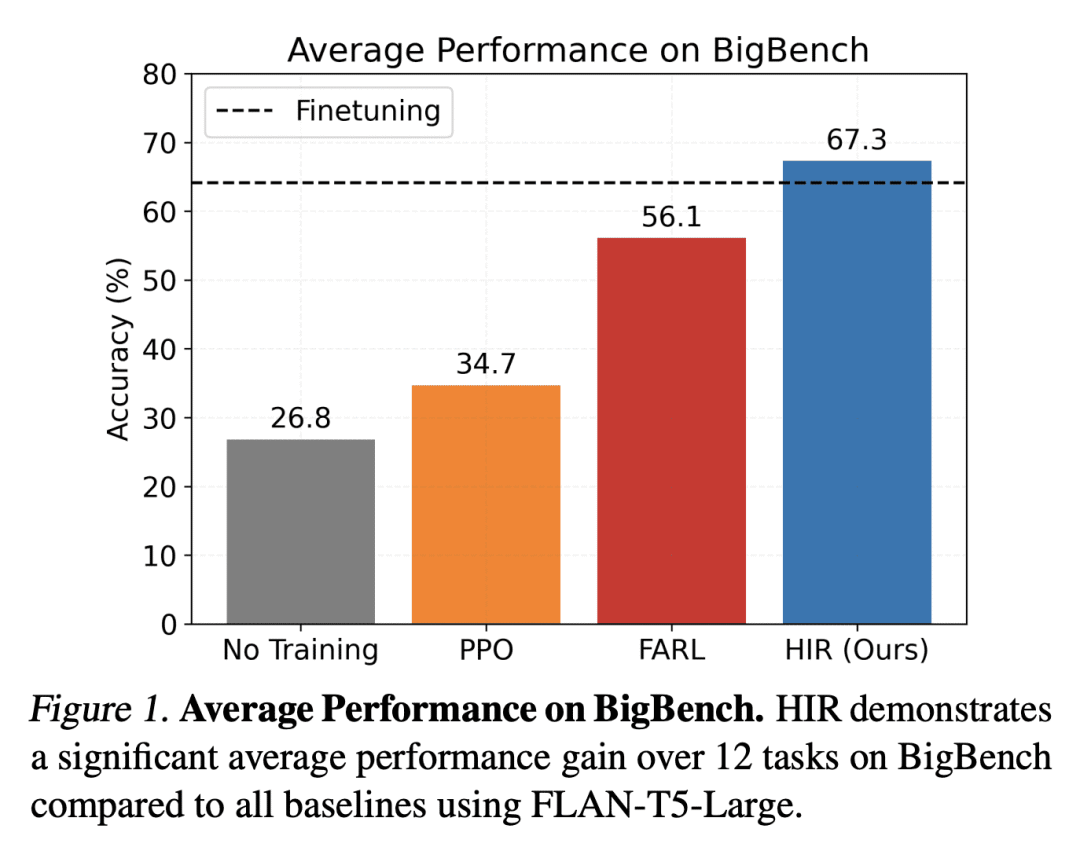

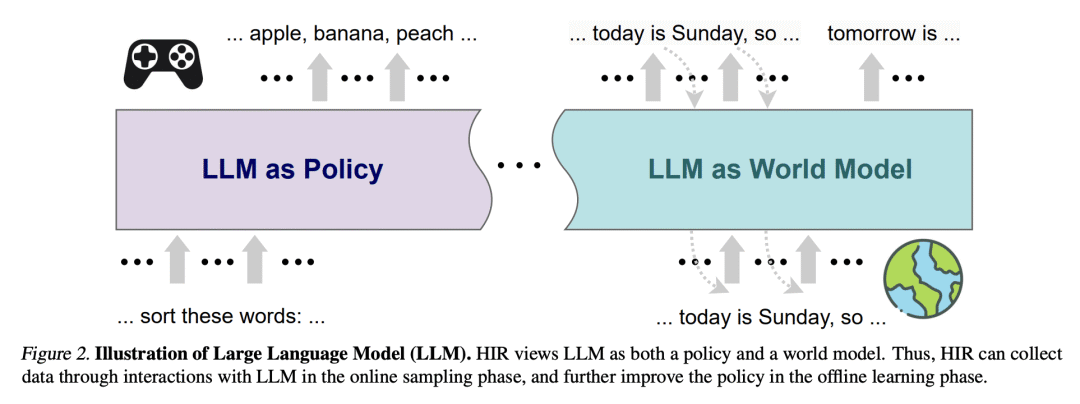

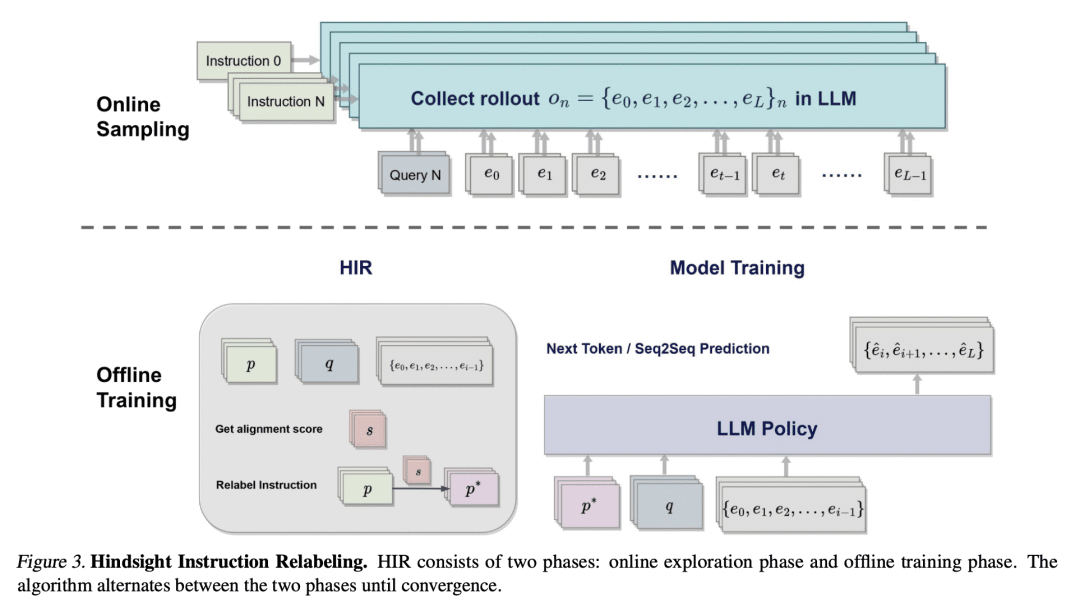

HIR 是一种新算法,将指令对齐和目标条件强化学习联系起来,产生了一种简单的两阶段事后重标记算法; -

HIR 利用成功数据和失败数据来有效地训练语言模型,不需要任何额外的训练管道,使其更具加数据高效; -

在12个具有挑战性的 BigBench 推理任务上,HIR 明显优于基线算法,与有监督微调(SFT)相当; -

HIR 为通过事后指示重标记从反馈中学习提供了一种新视角,可启发未来研究,即设计更高效和可扩展的算法通过人工反馈训练语言模型。

一句话总结:

提出了一种新算法——事后指令重标记(HIR),改善了语言模型与指令的一致性。HIR 在 BigBench 推理任务上取得了令人印象深刻的结果,是第一个将事后重标记应用于语言模型的算法。

Reinforcement learning has seen wide success in finetuning large language models to better align with instructions via human feedback. The so-called algorithm, Reinforcement Learning with Human Feedback (RLHF) demonstrates impressive performance on the GPT series models. However, the underlying Reinforcement Learning (RL) algorithm is complex and requires an additional training pipeline for reward and value networks. In this paper, we consider an alternative approach: converting feedback to instruction by relabeling the original one and training the model for better alignment in a supervised manner. Such an algorithm doesn’t require any additional parameters except for the original language model and maximally reuses the pretraining pipeline. To achieve this, we formulate instruction alignment problem for language models as a goal-reaching problem in decision making. We propose Hindsight Instruction Relabeling (HIR), a novel algorithm for aligning language models with instructions. The resulting two-stage algorithm shed light to a family of reward-free approaches that utilize the hindsightly relabeled instructions based on feedback. We evaluate the performance of HIR extensively on 12 challenging BigBench reasoning tasks and show that HIR outperforms the baseline algorithms and is comparable to or even surpasses supervised finetuning.

https://arxiv.org/abs/2302.05206
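The relabeling idea behind HIR can be sketched as a data-construction step. The instruction templates, the `answer_key` correctness check and the data below are hypothetical. The sketch only shows how a sampled output, correct or not, is paired with an instruction it actually satisfies, so that both successes and failures become ordinary supervised training examples.

```python
from dataclasses import dataclass

# Hypothetical instruction templates used for relabeling.
CORRECT = "Generate a correct answer to this question."
WRONG = "Generate a wrong answer to this question."


@dataclass
class TrainingExample:
    prompt: str
    target: str


def relabel(question, output, is_correct):
    """Hindsight relabeling: rewrite the instruction so that the sampled
    output is a valid response to the relabeled prompt."""
    instruction = CORRECT if is_correct else WRONG
    return TrainingExample(prompt=f"{instruction}\n{question}", target=output)


def build_relabeled_dataset(samples, answer_key):
    """Turn (question, sampled output) pairs into supervised examples,
    keeping failures as well as successes."""
    return [relabel(q, out, out == answer_key[q]) for q, out in samples]


# Stage 1 (stand-in): outputs sampled from the current model.
samples = [("What is 2 + 2?", "4"), ("What is 2 + 2?", "5")]
answer_key = {"What is 2 + 2?": "4"}

dataset = build_relabeled_dataset(samples, answer_key)
for ex in dataset:
    print(repr(ex.prompt), "->", ex.target)
# Stage 2 would fine-tune the model on `dataset` with the ordinary
# supervised (next-token) loss, reusing the pretraining pipeline.
```

No reward or value network appears anywhere in this loop, which is the property the abstract contrasts with RLHF.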

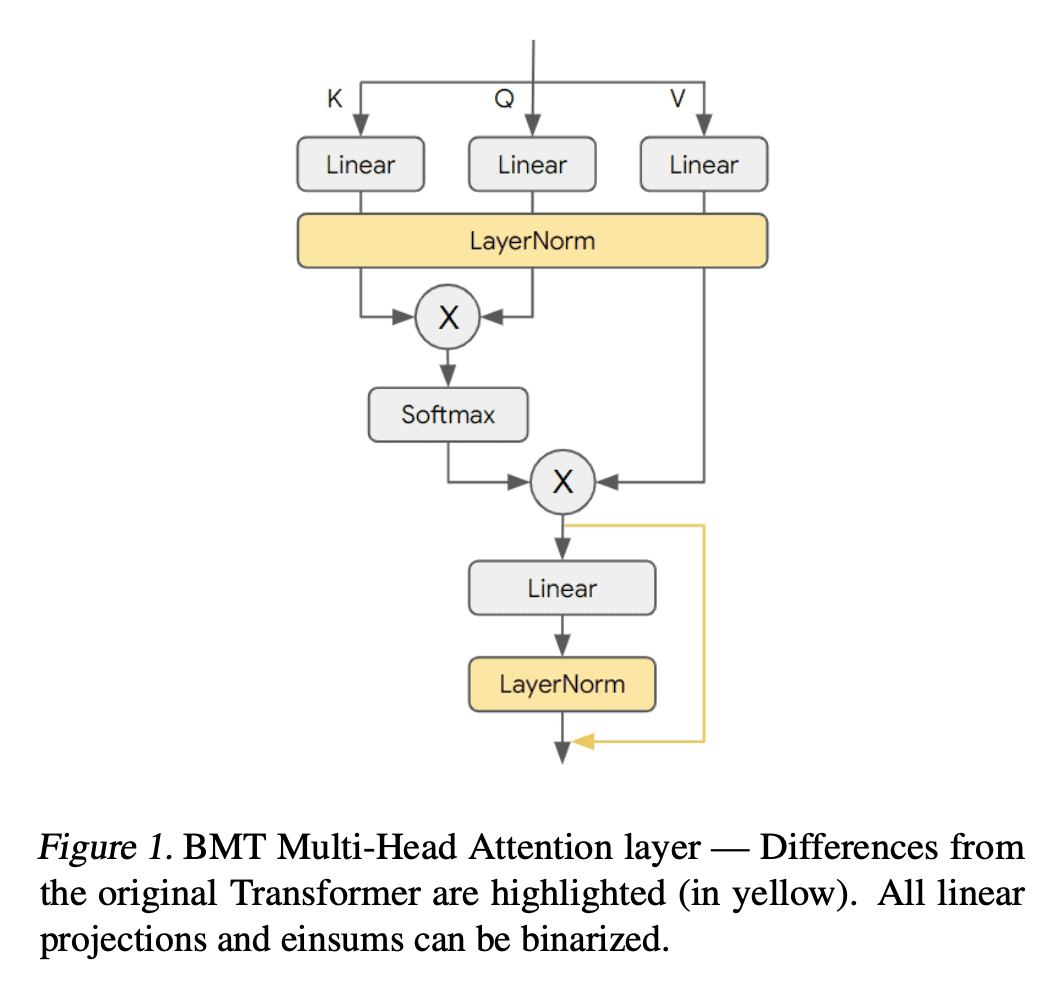

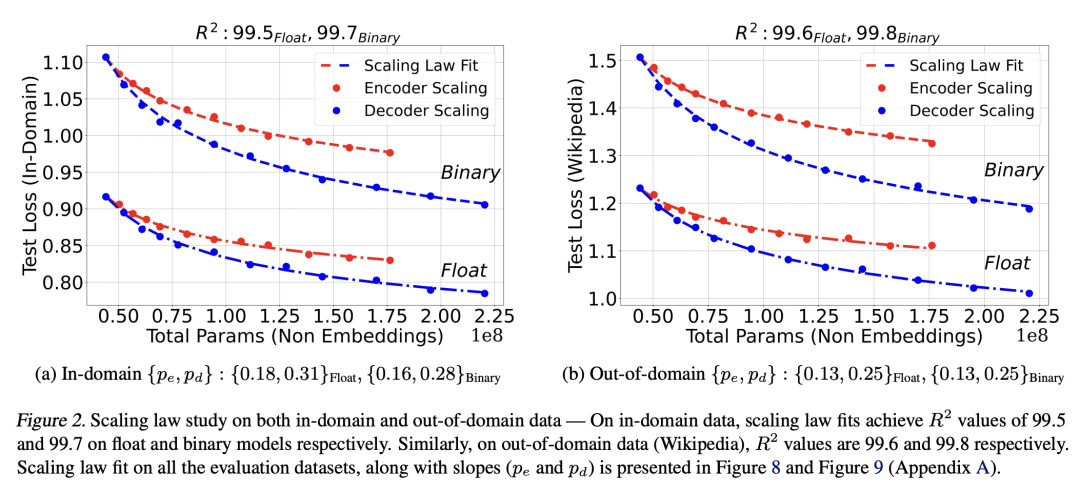

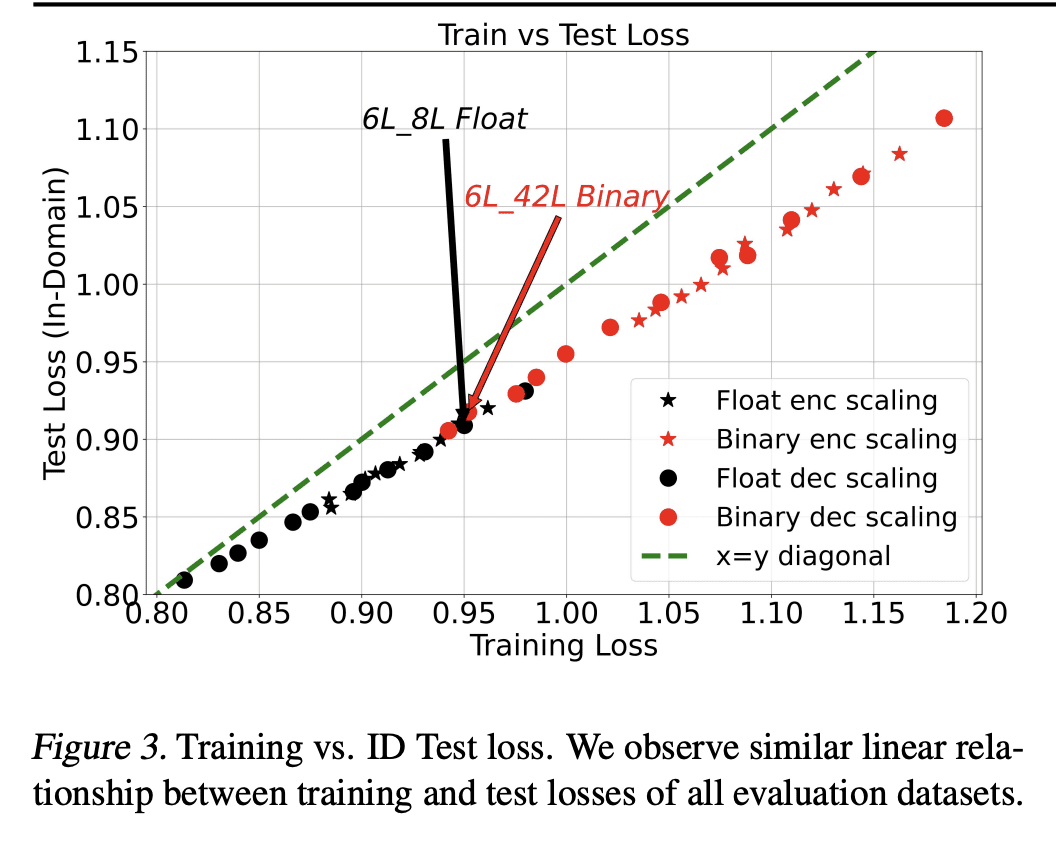

5、[CL] Binarized Neural Machine Translation

Y Zhang, A Garg, Y Cao, Ł Lew, B Ghorbani, Z Zhang, O Firat

[Cornell University & Google Research]

二值化神经机器翻译

要点:

-

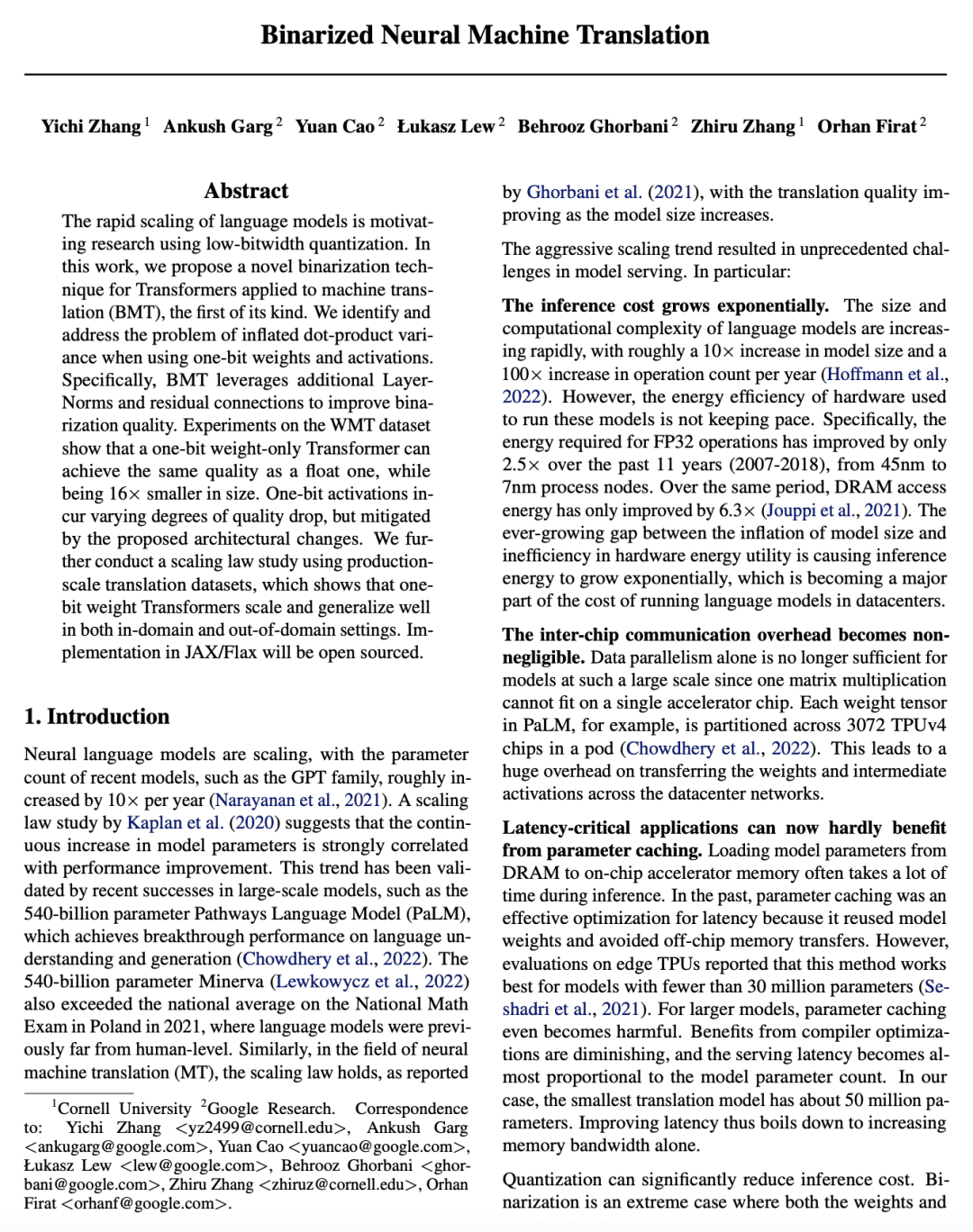

BMT 是机器翻译 Transformer 的一种新的二值化技术; -

BMT 通过利用额外的 LayerNorm 和残差连接来提高二值化质量,解决了使用 1bit 权重和激活时点积方差膨胀的问题; -

BMT 实现了与浮点模型相同的质量,同时模型大小比只用 1bit 权重的 Transformer 小16倍,但 1bit 激活会产生不同程度的质量下降; -

BMT在域内和域外的设置中,都有很好的扩展性和泛化性,二值化可以成为未来机器翻译模型服务的潜在替代技术。

一句话总结:

提出一种新的机器翻译中 Transformer 的二值化技术BMT,解决了使用 1bit 权重和激活时点积方差膨胀的问题,利用额外的 LayerNorm 和残差连接提高二值化质量,可以实现机器翻译的二值化,在保持翻译质量的同时减少模型的大小。

The rapid scaling of language models is motivating research using low-bitwidth quantization. In this work, we propose a novel binarization technique for Transformers applied to machine translation (BMT), the first of its kind. We identify and address the problem of inflated dot-product variance when using one-bit weights and activations. Specifically, BMT leverages additional LayerNorms and residual connections to improve binarization quality. Experiments on the WMT dataset show that a one-bit weight-only Transformer can achieve the same quality as a float one, while being 16x smaller in size. One-bit activations incur varying degrees of quality drop, but mitigated by the proposed architectural changes. We further conduct a scaling law study using production-scale translation datasets, which shows that one-bit weight Transformers scale and generalize well in both in-domain and out-of-domain settings. Implementation in JAX/Flax will be open sourced.

https://arxiv.org/abs/2302.04907
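The variance-inflation problem that BMT targets can be reproduced numerically. For two independent vectors with entries in {+1, -1}, each product term has variance 1, so their dot product has variance equal to the dimension D. The sketch below checks this empirically and then applies a LayerNorm-style normalization to a binarized layer's outputs. It illustrates the problem and the general kind of remedy only; where BMT actually places its extra LayerNorms and residual connections is not modeled here.

```python
import random


def sign_binarize(vec):
    """Map each value to +1.0 or -1.0 (one bit per entry)."""
    return [1.0 if v >= 0 else -1.0 for v in vec]


def variance(xs):
    mu = sum(xs) / len(xs)
    return sum((x - mu) ** 2 for x in xs) / len(xs)


def layer_norm(vec, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mu = sum(vec) / len(vec)
    denom = (variance(vec) + eps) ** 0.5
    return [(v - mu) / denom for v in vec]


def random_binary(dim, rng):
    return sign_binarize([rng.gauss(0.0, 1.0) for _ in range(dim)])


def binary_dot(a, b):
    return sum(x * y for x, y in zip(a, b))


rng = random.Random(0)
# Expected variance of a dot product of two independent +/-1 vectors = dimension.
var_small = variance([binary_dot(random_binary(64, rng), random_binary(64, rng)) for _ in range(500)])
var_large = variance([binary_dot(random_binary(1024, rng), random_binary(1024, rng)) for _ in range(500)])
print(round(var_small), round(var_large))

# A 16-unit binarized "layer": one binary input, 16 binary weight rows.
x = random_binary(1024, rng)
outputs = [binary_dot(x, random_binary(1024, rng)) for _ in range(16)]
normed = layer_norm(outputs)  # rescaled to unit variance regardless of D
```

Running it should print values close to 64 and 1024: the output scale grows with the hidden size, which is why a normalization after the binarized matrix product helps.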

Other papers worth noting:

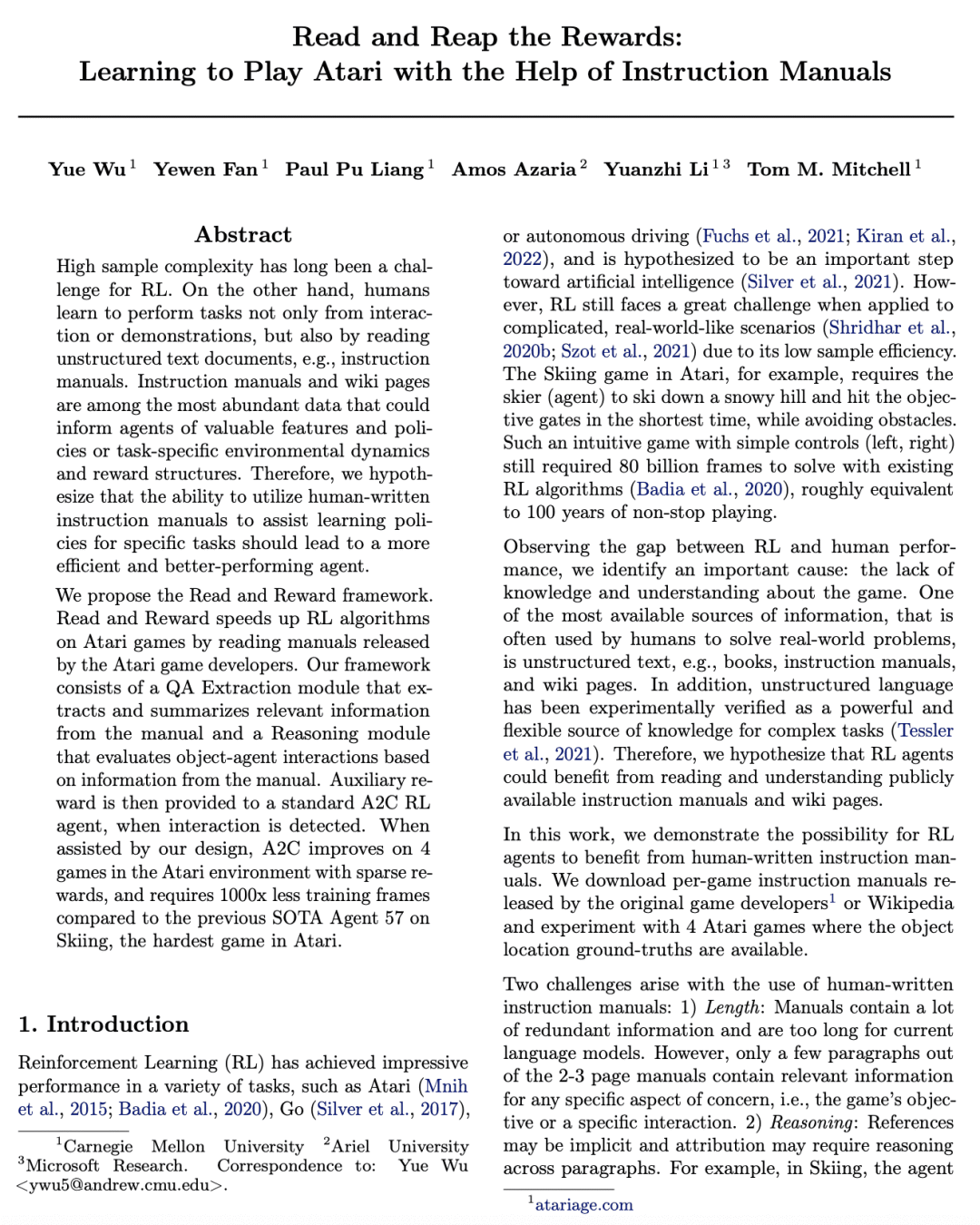

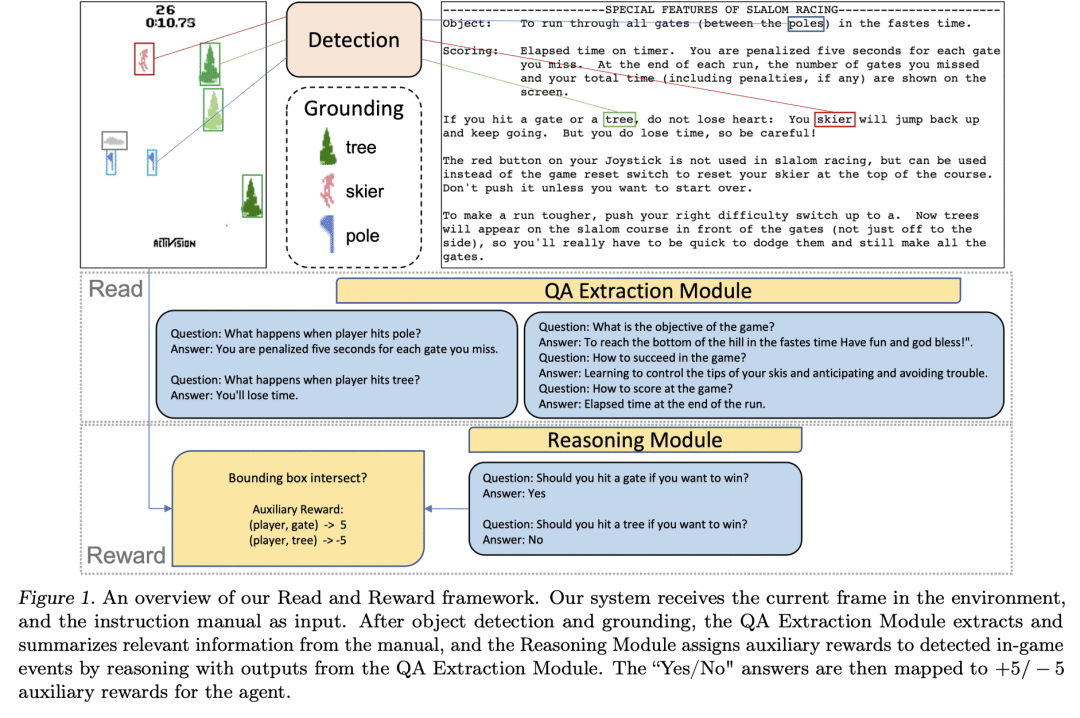

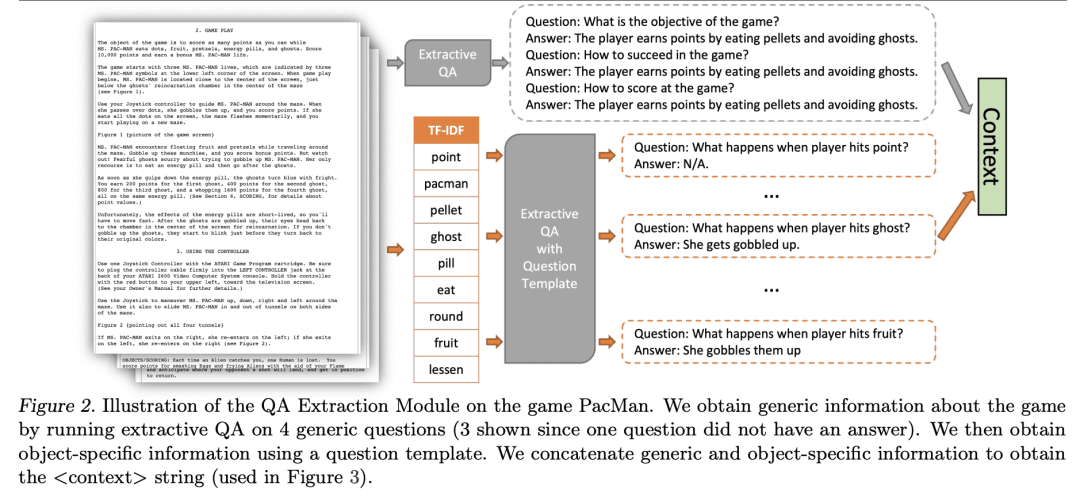

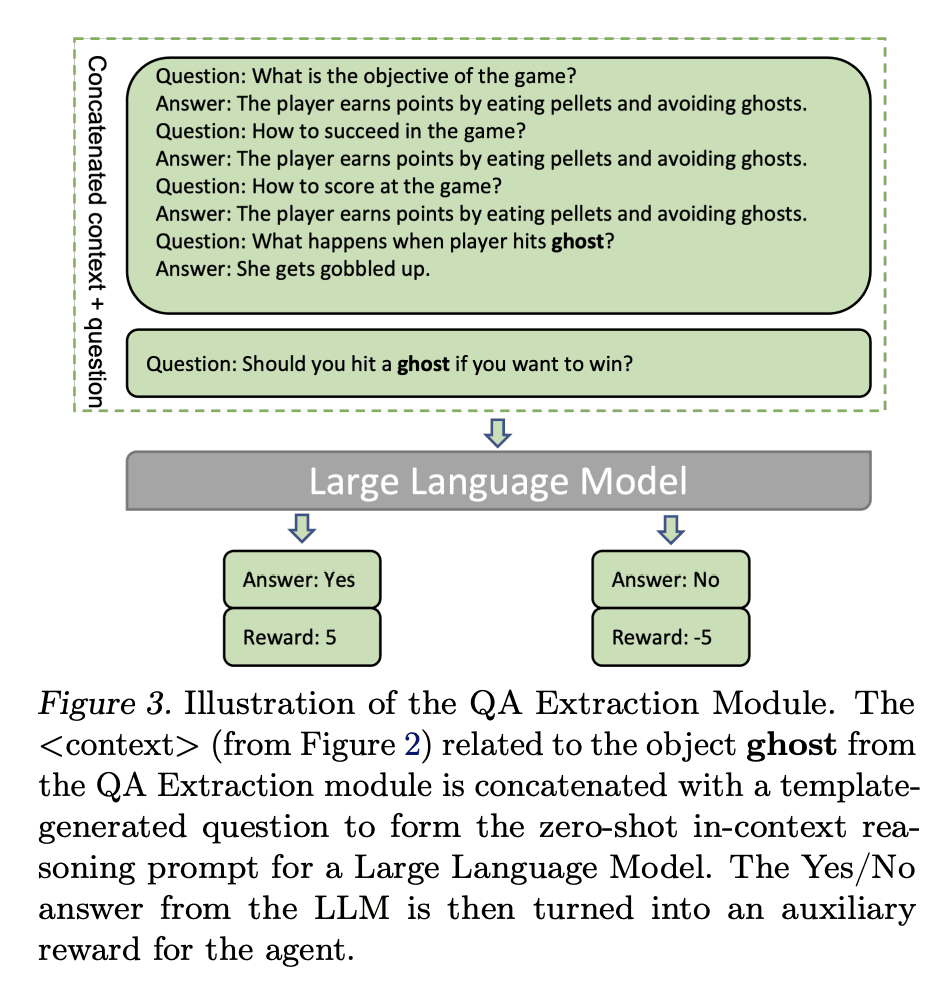

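[LG] Read and Reap the Rewards: Learning to Play Atari with the Help of Instruction Manuals

Y Wu, Y Fan, P P Liang, A Azaria, Y Li, T M. Mitchell

[CMU]

Read and reap the rewards: learning to play Atari games with the help of instruction manuals

Key points:

- High sample complexity has long been a major challenge in reinforcement learning, yet humans can also learn from unstructured text documents such as instruction manuals, which are abundant and informative;
- The proposed Read and Reward framework uses human-written instruction manuals to speed up reinforcement learning algorithms on Atari games;
- The framework consists of a QA Extraction module that extracts relevant information from the manual and a Reasoning module that assigns auxiliary rewards to in-game events;
- A2C, a standard reinforcement learning agent, improves on 4 Atari games with sparse rewards, and on Skiing, the hardest of them, it needs 1000x fewer training frames than the previous state-of-the-art agent, Agent57.

One-sentence summary:

Proposes the Read and Reward framework, which speeds up reinforcement learning on Atari games by using human-written instruction manuals. The framework consists of a QA Extraction module and a Reasoning module, which respectively extract relevant information from the manual and assign auxiliary rewards to in-game events.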

High sample complexity has long been a challenge for RL. On the other hand, humans learn to perform tasks not only from interaction or demonstrations, but also by reading unstructured text documents, e.g., instruction manuals. Instruction manuals and wiki pages are among the most abundant data that could inform agents of valuable features and policies or task-specific environmental dynamics and reward structures. Therefore, we hypothesize that the ability to utilize human-written instruction manuals to assist learning policies for specific tasks should lead to a more efficient and better-performing agent. We propose the Read and Reward framework. Read and Reward speeds up RL algorithms on Atari games by reading manuals released by the Atari game developers. Our framework consists of a QA Extraction module that extracts and summarizes relevant information from the manual and a Reasoning module that evaluates object-agent interactions based on information from the manual. Auxiliary reward is then provided to a standard A2C RL agent, when interaction is detected. When assisted by our design, A2C improves on 4 games in the Atari environment with sparse rewards, and requires 1000x less training frames compared to the previous SOTA Agent 57 on Skiing, the hardest game in Atari.

https://arxiv.org/abs/2302.04449
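The reward-shaping step can be sketched as follows. The object names and the +1/-1 judgements are hypothetical placeholders for what the QA Extraction and Reasoning modules would derive from a manual of some game. The sketch only shows how an auxiliary reward is added to the environment reward whenever an agent-object interaction is detected, before the result is passed to an agent such as A2C.

```python
# Hypothetical judgements for a hypothetical game's manual: whether touching
# an object should be encouraged (+1) or discouraged (-1).
MANUAL_JUDGEMENTS = {"coin": +1, "enemy": -1, "spike": -1}


def shaped_reward(env_reward, interactions, judgements, bonus=1.0):
    """Add an auxiliary reward for each detected agent-object interaction.

    `interactions` lists the objects the agent touched this step; objects
    the manual says nothing about contribute nothing.
    """
    auxiliary = sum(bonus * judgements.get(obj, 0) for obj in interactions)
    return env_reward + auxiliary


print(shaped_reward(0.0, ["coin"], MANUAL_JUDGEMENTS))            # 1.0
print(shaped_reward(0.0, ["enemy", "spike"], MANUAL_JUDGEMENTS))  # -2.0
print(shaped_reward(0.0, ["cloud"], MANUAL_JUDGEMENTS))           # 0.0
```

In sparse-reward games the environment reward is usually 0.0 on most steps, so a dense auxiliary signal like this is what gives the agent feedback between the rare true rewards.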

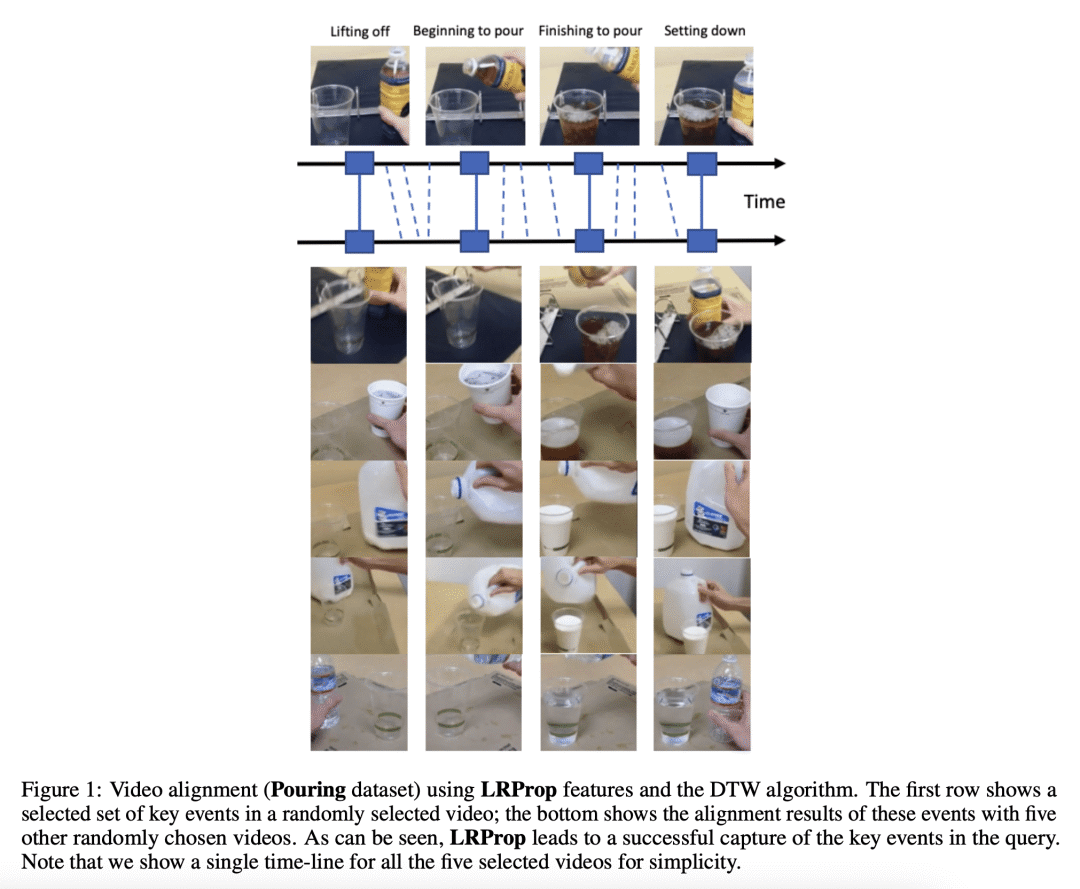

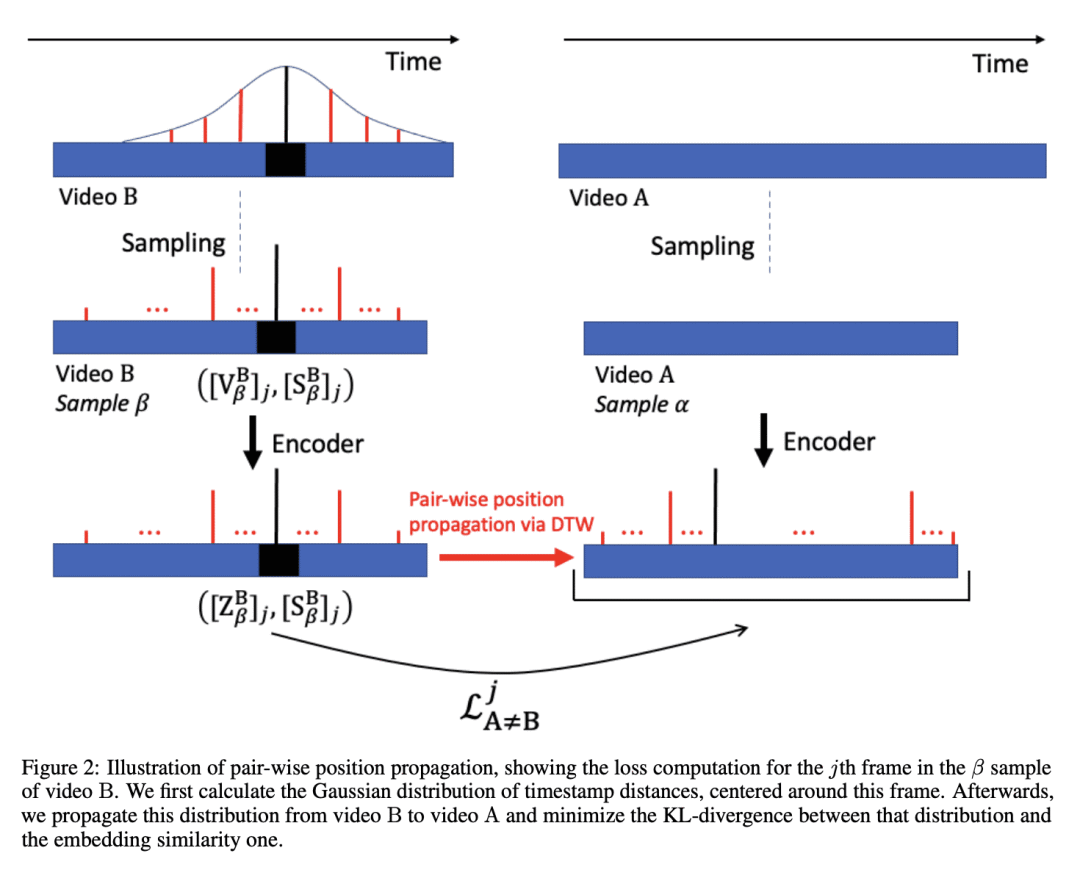

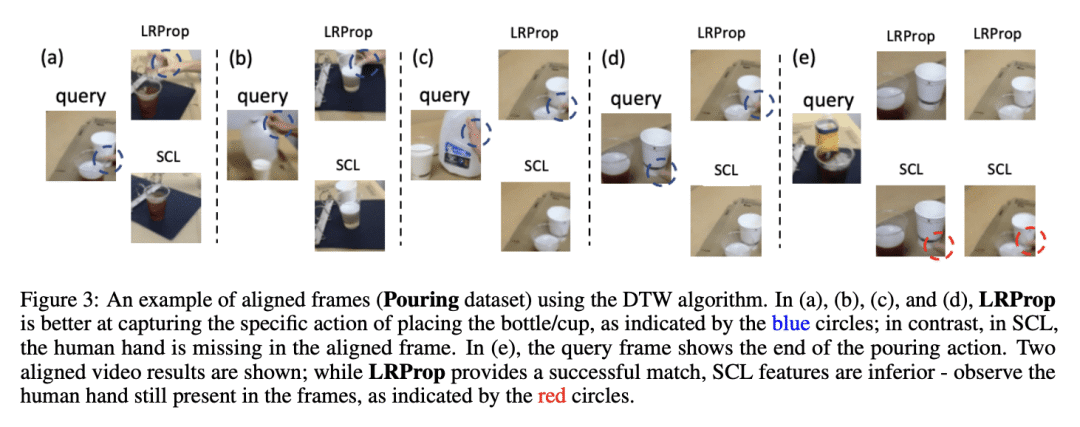

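[CV] Weakly-supervised Representation Learning for Video Alignment and Analysis

G Bar-Shalom, G Leifman, M Elad, E Rivlin

[Verily Research]

Weakly supervised representation learning for video alignment and analysis

Key points:

- LRProp is a weakly supervised representation learning method for video analysis that uses a Transformer encoder and the DTW algorithm to temporally align pairs of videos from the same action class;
- The proposed “pair-wise position propagation” matches the probability distribution of correspondences between frames from different videos with the similarity of frame-level features, giving better temporal awareness than prior work;
- LRProp consistently outperforms the state of the art on temporal alignment tasks and sets a new performance bar for downstream video analysis tasks.

One-sentence summary:

Proposes LRProp, a weakly supervised representation learning method for video analysis. It uses a Transformer encoder to extract frame-level features and the Dynamic Time Warping (DTW) algorithm to temporally align pairs of videos from the same action class, and its “pair-wise position propagation” matches the probability distribution of correspondences between frames from different videos with the similarity of frame-level features.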

Many tasks in video analysis and understanding boil down to the need for frame-based feature learning, aiming to encapsulate the relevant visual content so as to enable simpler and easier subsequent processing. While supervised strategies for this learning task can be envisioned, self and weakly-supervised alternatives are preferred due to the difficulties in getting labeled data. This paper introduces LRProp — a novel weakly-supervised representation learning approach, with an emphasis on the application of temporal alignment between pairs of videos of the same action category. The proposed approach uses a transformer encoder for extracting frame-level features, and employs the DTW algorithm within the training iterations in order to identify the alignment path between video pairs. Through a process referred to as “pair-wise position propagation”, the probability distributions of these correspondences per location are matched with the similarity of the frame-level features via KL-divergence minimization. The proposed algorithm uses also a regularized SoftDTW loss for better tuning the learned features. Our novel representation learning paradigm consistently outperforms the state of the art on temporal alignment tasks, establishing a new performance bar over several downstream video analysis applications.

https://arxiv.org/abs/2302.04064
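The DTW alignment step that LRProp runs inside its training loop can be sketched with the classic dynamic-programming recursion. The scalar "frame features" and the absolute-difference distance are hypothetical stand-ins for learned frame embeddings and their distance. The abstract also mentions a regularized SoftDTW loss; SoftDTW replaces the hard `min` in this recursion with a smooth soft-min so that the alignment cost becomes differentiable, which is not shown here.

```python
def dtw(seq_a, seq_b, dist):
    """Dynamic time warping: minimal-cost monotonic alignment of two sequences.

    Returns the total cost and the alignment path as (i, j) index pairs.
    """
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best cost of aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = dist(seq_a[i - 1], seq_b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    # Backtrack from the end, always stepping to the cheapest predecessor.
    path, i, j = [], n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
    return cost[n][m], path[::-1]


# Scalar "frame features" for two clips of the same action performed at
# different speeds (hypothetical; LRProp would use learned embeddings).
clip_a = [0.0, 1.0, 2.0, 3.0]
clip_b = [0.0, 0.0, 1.0, 2.0, 2.0, 3.0]
total, path = dtw(clip_a, clip_b, lambda x, y: abs(x - y))
print(total, path)  # 0.0 [(0, 0), (0, 1), (1, 2), (2, 3), (2, 4), (3, 5)]
```

The path maps each frame of the slower clip to the matching frame of the faster one; repeated indices (here frames 0 and 2 of `clip_a`) are where one clip lingers.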

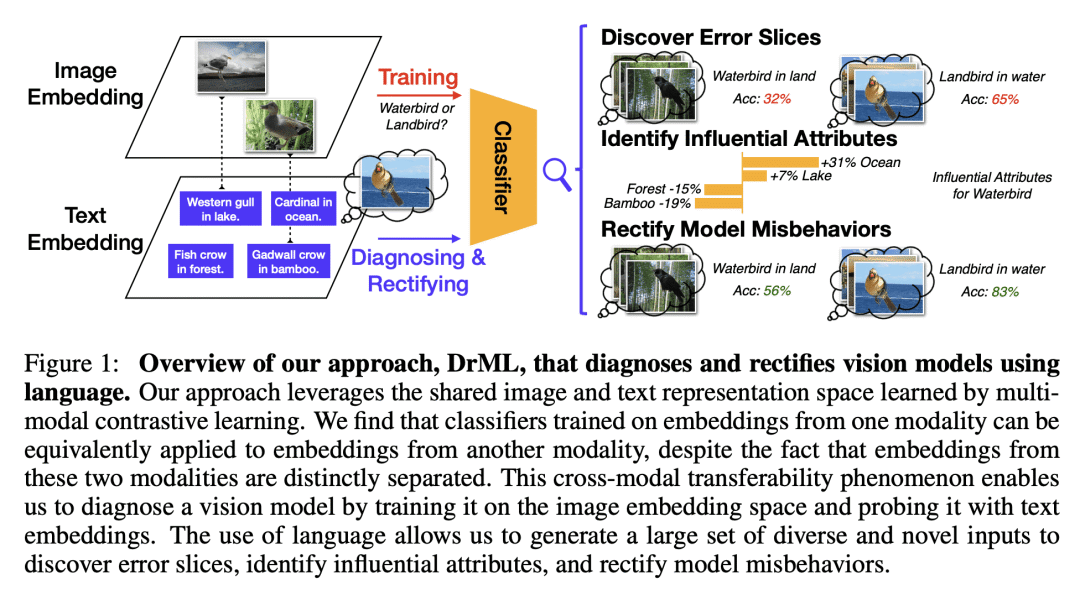

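[LG] Diagnosing and Rectifying Vision Models using Language

Y Zhang, J Z. HaoChen, S Huang, K Wang, J Zou, S Yeung

[Stanford University]

Diagnosing and rectifying vision models with language

Key points:

- Multi-modal contrastive learning models can learn an embedding space suitable for building strong vision classifiers, and this space can be used to diagnose and rectify vision classifiers with natural-language input;
- The proposed DrML framework can discover high-error data slices, identify influential attributes, and rectify undesirable model behaviors using language input, without requiring visual data;
- Provides a theoretical explanation and empirical verification of cross-modal transferability, i.e., that a classifier trained on embeddings from one modality can be applied to embeddings from another, so that text inputs can serve as good proxies for image inputs;
- On three image datasets representing the three most common model failure modes, DrML is shown to effectively identify error slices and influential attributes, and to use language to rectify those failure modes.

One-sentence summary:

Proposes DrML, a framework for diagnosing and rectifying vision models with natural language. It builds on the embedding space learned by multi-modal contrastive models, which is suitable for building strong vision classifiers, and uses language to find high-error data slices, identify influential attributes, and rectify undesirable model behaviors, without requiring visual data.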

Recent multi-modal contrastive learning models have demonstrated the ability to learn an embedding space suitable for building strong vision classifiers, by leveraging the rich information in large-scale image-caption datasets. Our work highlights a distinct advantage of this multi-modal embedding space: the ability to diagnose vision classifiers through natural language. The traditional process of diagnosing model behaviors in deployment settings involves labor-intensive data acquisition and annotation. Our proposed method can discover high-error data slices, identify influential attributes and further rectify undesirable model behaviors, without requiring any visual data. Through a combination of theoretical explanation and empirical verification, we present conditions under which classifiers trained on embeddings from one modality can be equivalently applied to embeddings from another modality. On a range of image datasets with known error slices, we demonstrate that our method can effectively identify the error slices and influential attributes, and can further use language to rectify failure modes of the classifier.

https://arxiv.org/abs/2302.04269
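Cross-modal transfer, the property that lets text stand in for images, can be illustrated with a toy example. The 2-D embeddings, the constant offset between the two modalities and the per-modality mean-centering are all assumptions made for this sketch, and the nearest-centroid classifier is chosen only for brevity; this is not the DrML procedure. A classifier fitted on text embeddings misclassifies the raw image embeddings, but transfers once each modality is centered on its own mean.

```python
import math


def mean_vec(vecs):
    return [sum(c) / len(vecs) for c in zip(*vecs)]


def center(vecs):
    """Subtract the modality's mean embedding from every vector."""
    mu = mean_vec(vecs)
    return [[v - m for v, m in zip(vec, mu)] for vec in vecs]


def fit_centroids(embs, labels):
    """Per-class mean embedding (a minimal linear-ish classifier)."""
    return {c: mean_vec([e for e, l in zip(embs, labels) if l == c])
            for c in sorted(set(labels))}


def predict(centroids, emb):
    """Nearest-centroid classification by Euclidean distance."""
    return min(centroids, key=lambda c: math.dist(centroids[c], emb))


labels = ["cat", "cat", "dog", "dog"]
# Hypothetical text embeddings of captions, e.g. "a photo of a cat".
text_embs = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.2, 0.8]]
# Hypothetical image embeddings: same structure, shifted by a constant gap.
gap = [2.0, 0.0]
image_embs = [[t + g for t, g in zip(e, gap)] for e in text_embs]

raw_preds = [predict(fit_centroids(text_embs, labels), e) for e in image_embs]
centered_centroids = fit_centroids(center(text_embs), labels)
centered_preds = [predict(centered_centroids, e) for e in center(image_embs)]
print(raw_preds)       # ['cat', 'cat', 'cat', 'cat']
print(centered_preds)  # ['cat', 'cat', 'dog', 'dog']
```

The classifier never sees an image during fitting; only the text side is used for training, which mirrors the "without requiring visual data" claim at toy scale.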

[CV] Invariant Slot Attention: Object Discovery with Slot-Centric Reference Frames

O Biza, S v Steenkiste, M S. M. Sajjadi, G F. Elsayed, A Mahendran, T Kipf

[Google Research & Northeastern University]

不变槽注意力:基于槽中心参考框架的目标发现

要点:

-

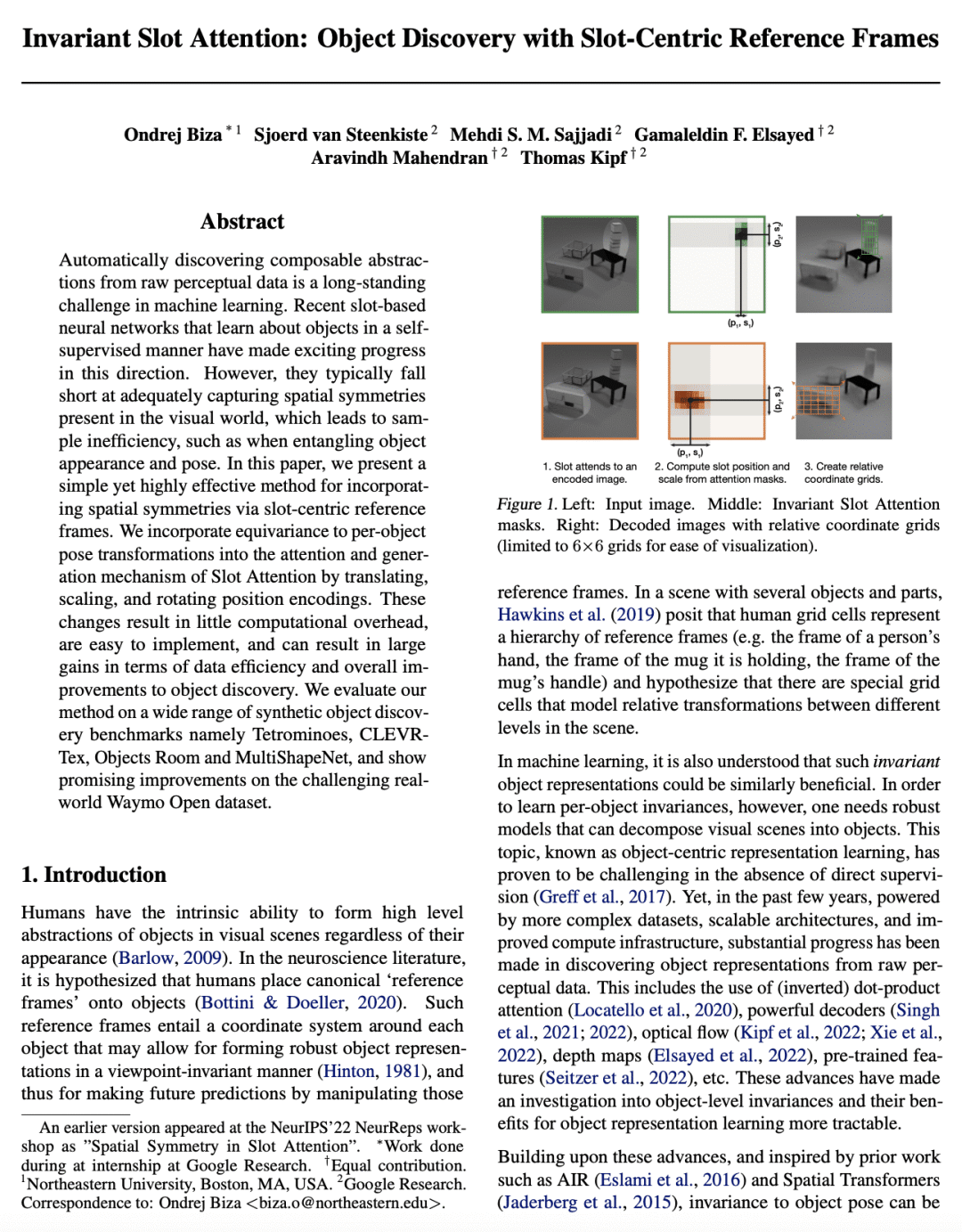

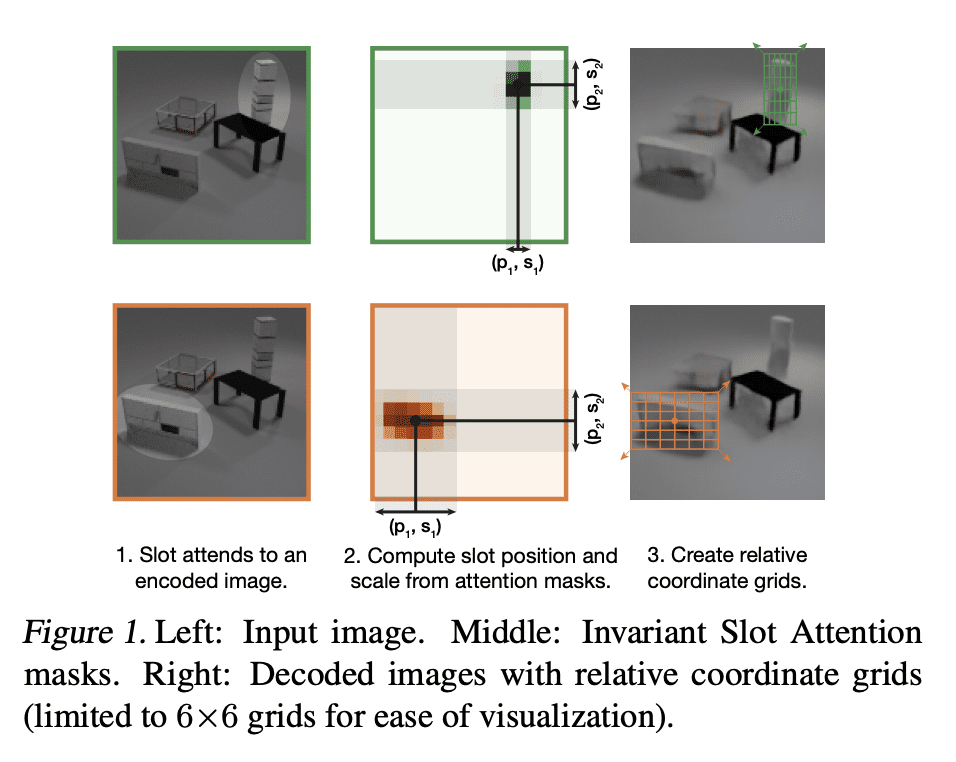

不变槽注意力(ISA)是一种用以槽为中心的参考框架进行无监督场景分解和目标发现的方法,能学习对几何变换(包括平移、缩放和旋转)不变的物体表示; -

ISA通过将各自的位置编码相对于每个物体的推断姿态进行转换,实现了对每个物体姿态转换的等变性; -

通过 ISA 纳入空间对称性,可以在数据效率方面获得巨大收益,并全面改善目标发现; -

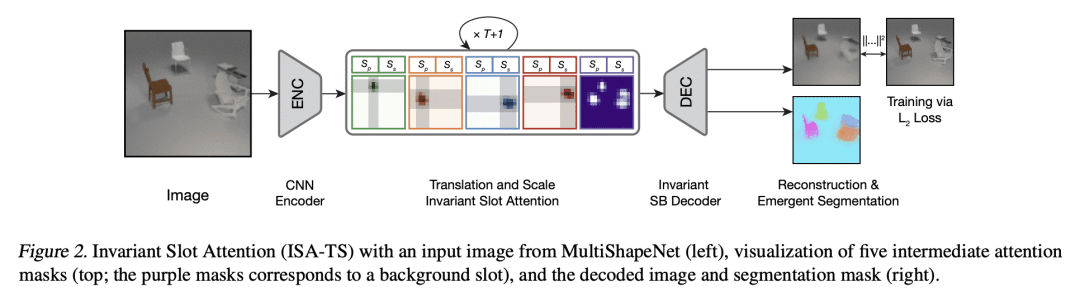

所提出的方法在各种合成和真实世界的数据集上进行了评估,并显示出有希望的结果。

一句话总结:

提出不变槽注意力(ISA),一种用以槽为中心的参考框架来捕捉视觉世界中的空间对称性的目标发现方法。所提出的方法通过平移、缩放和旋转位置编码实现了对每个物体姿态变换的等变性。通过 ISA 纳入空间对称性,可以在数据效率方面获得巨大收益,并全面改善目标发现。该方法在各种合成和真实世界的数据集上进行了评估。

Automatically discovering composable abstractions from raw perceptual data is a long-standing challenge in machine learning. Recent slot-based neural networks that learn about objects in a self-supervised manner have made exciting progress in this direction. However, they typically fall short at adequately capturing spatial symmetries present in the visual world, which leads to sample inefficiency, such as when entangling object appearance and pose. In this paper, we present a simple yet highly effective method for incorporating spatial symmetries via slot-centric reference frames. We incorporate equivariance to per-object pose transformations into the attention and generation mechanism of Slot Attention by translating, scaling, and rotating position encodings. These changes result in little computational overhead, are easy to implement, and can result in large gains in terms of data efficiency and overall improvements to object discovery. We evaluate our method on a wide range of synthetic object discovery benchmarks namely CLEVR, Tetrominoes, CLEVRTex, Objects Room and MultiShapeNet, and show promising improvements on the challenging real-world Waymo Open dataset.

https://arxiv.org/abs/2302.04973
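The core operation behind slot-centric reference frames, expressing position encodings relative to a slot's position, scale and rotation, can be sketched for 2-D coordinates. The pose values are hypothetical (in ISA they are inferred per slot), and the formula p_rel = R(-θ)(p - t) / s is our assumption of a standard way to undo a translation, rotation and scaling, not code from the paper.

```python
import math


def to_slot_frame(points, center, scale, angle):
    """Express absolute 2-D positions in a slot's reference frame:
    p_rel = R(-angle) @ (p - center) / scale."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    rel = []
    for x, y in points:
        dx, dy = x - center[0], y - center[1]
        # Rotate by -angle, then divide by the slot's scale.
        rel.append(((cos_a * dx + sin_a * dy) / scale,
                    (-sin_a * dx + cos_a * dy) / scale))
    return rel


# Hypothetical pose inferred for one slot.
center, scale, angle = (0.5, 0.5), 0.25, math.pi / 2
grid = [(0.5, 0.5), (0.5, 0.75)]
rel = to_slot_frame(grid, center, scale, angle)
print(rel)  # approximately [(0, 0), (1, 0)] up to floating-point error

# Moving the object and its slot's inferred position by the same offset
# leaves the relative positions unchanged.
shift = (0.2, -0.1)
moved = to_slot_frame([(x + shift[0], y + shift[1]) for x, y in grid],
                      (center[0] + shift[0], center[1] + shift[1]), scale, angle)
```

Because the slot sees positions only in its own frame, what it encodes about an object's appearance no longer has to change when the object moves, shrinks or rotates; the pose carries that information instead.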
