Authors: X Ren, P Zhou, X Meng, X Huang, Y Wang, W Wang…

[Huawei]

Summary:

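PanGu-Σ is a sparse language model with 1.085T parameters. It was trained on Ascend 910 AI processors with the MindSpore framework and achieves state-of-the-art zero-shot performance on a range of Chinese NLP tasks.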

Key points:

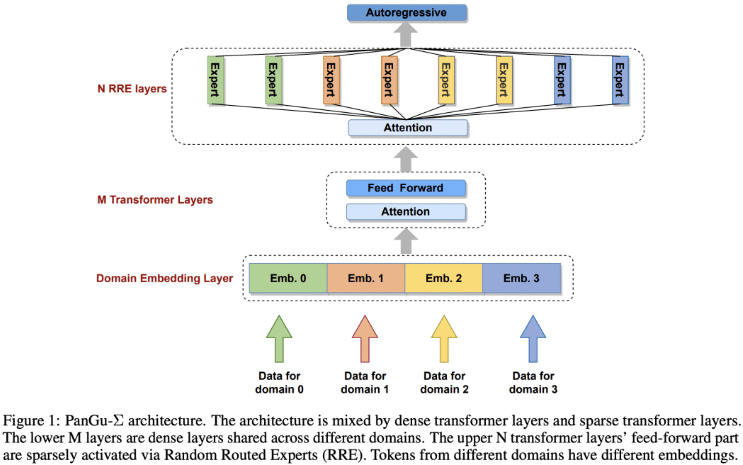

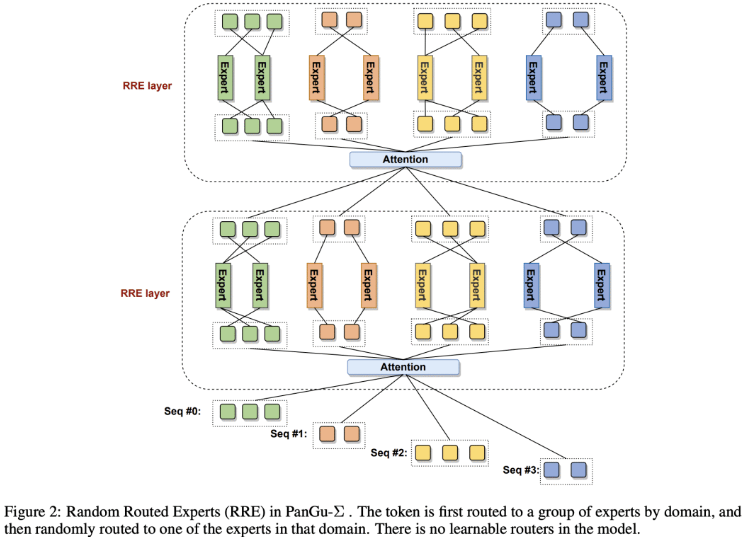

- PanGu-Σ is a 1.085T-parameter language model made sparse with Random Routed Experts (RRE) and trained efficiently with Expert Computation and Storage Separation (ECSS);

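- PanGu-Σ achieves state-of-the-art zero-shot performance on a variety of Chinese NLP downstream tasks and shows strong abilities in open-domain dialogue, question answering, machine translation, and code generation;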

- Through heterogeneous computing, ECSS raises PanGu-Σ's training throughput by 6.3x while reducing host-to-device and device-to-host communication;

- After fine-tuning on application domains such as dialogue, machine translation, and code generation, PanGu-Σ outperforms other SOTA models.

https://arxiv.org/abs/2303.10845

The scaling of large language models has greatly improved natural language understanding, generation, and reasoning. In this work, we develop a system that trained a trillion-parameter language model on a cluster of Ascend 910 AI processors with the MindSpore framework, and present the resulting language model with 1.085T parameters, named PanGu-Σ. With parameters inherited from PanGu-α, we extend the dense Transformer model to a sparse one with Random Routed Experts (RRE), and efficiently train the model over 329B tokens by using Expert Computation and Storage Separation (ECSS). This resulted in a 6.3x increase in training throughput through heterogeneous computing. Our experimental findings show that PanGu-Σ provides state-of-the-art performance in zero-shot learning of various Chinese NLP downstream tasks. Moreover, it demonstrates strong abilities when fine-tuned on application data of open-domain dialogue, question answering, machine translation, and code generation.
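The abstract names Random Routed Experts (RRE) without describing the routing. Below is a minimal sketch, assuming a two-level scheme: a token is first sent to the expert group of its domain, then mapped to one expert in that group by a random token-ID-to-expert table that is drawn once and never learned. The class name `RandomRoutedExperts`, the domain labels, and all parameters are hypothetical illustrations, not the paper's code.

```python
import random
from typing import Dict, List


class RandomRoutedExperts:
    """Hypothetical RRE-style router (illustrative sketch, not PanGu-Sigma's code).

    Level 1: each domain owns a disjoint, contiguous group of expert IDs.
    Level 2: within a group, a token ID picks its expert through a fixed
    random table created at init time (no learned gating network).
    """

    def __init__(self, domains: List[str], experts_per_domain: int,
                 vocab_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Assign each domain its own block of global expert IDs.
        self.groups: Dict[str, List[int]] = {}
        next_id = 0
        for domain in domains:
            self.groups[domain] = list(range(next_id, next_id + experts_per_domain))
            next_id += experts_per_domain
        # Fixed random slot per token ID, shared by all groups; never updated.
        self.token_to_slot = [rng.randrange(experts_per_domain)
                              for _ in range(vocab_size)]

    def route(self, domain: str, token_ids: List[int]) -> List[int]:
        """Return the global expert ID chosen for each token of `domain`."""
        group = self.groups[domain]
        return [group[self.token_to_slot[t]] for t in token_ids]


if __name__ == "__main__":
    router = RandomRoutedExperts(["zh", "code"], experts_per_domain=4, vocab_size=100)
    print(router.route("code", [1, 2, 3, 1]))  # token 1 hits the same expert both times
```

In this sketch the routing is deterministic for a given seed, so the same token in the same domain always reaches the same expert and no load-balancing loss or gating parameters are needed; whether PanGu-Σ shares one table across groups, as done here, is an assumption.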
