Authors: S Yin, C Wu, H Yang, J Wang, X Wang, M Ni, Z Yang…

[Microsoft Research Asia & University of Science and Technology of China]

Summary:

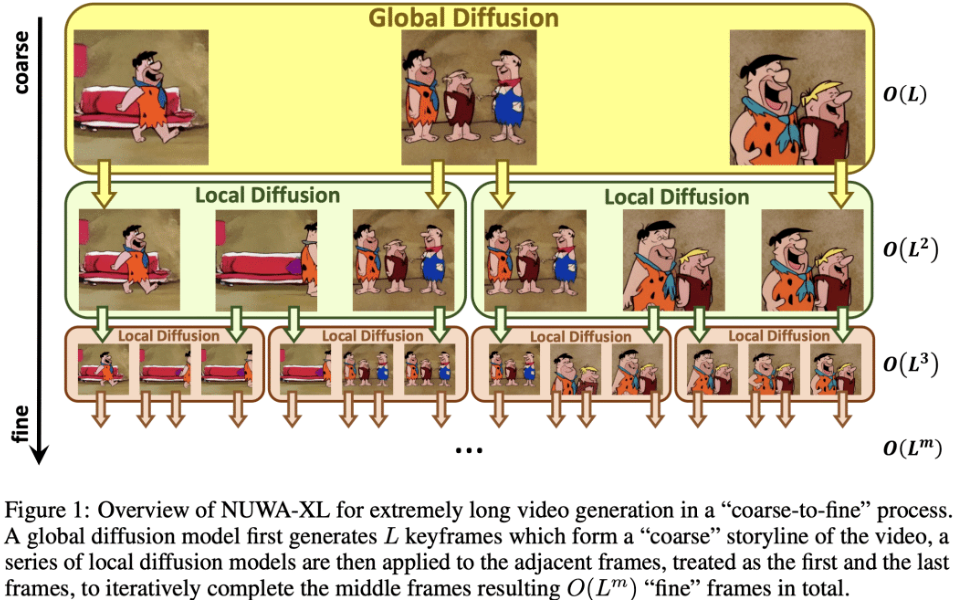

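NUWA-XL is a new video generation method built on a “Diffusion over Diffusion” architecture. It uses a “coarse-to-fine” process to generate highly consistent long videos in parallel, and it can be trained directly on long videos (3376 frames), which reduces the gap between training and inference.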

Key points:

- Proposes NUWA-XL, which uses a “Diffusion over Diffusion” architecture.

- NUWA-XL is the first model trained directly on long videos (3376 frames), which reduces the training-inference gap in long video generation.

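- NUWA-XL supports parallel inference: when generating 1024 frames, it cuts the average inference time by 94.26% (from 7.55 min to 26 s on the same hardware).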

- Builds the FlintstonesHD dataset to demonstrate the effectiveness of NUWA-XL and to provide a benchmark for long video generation.
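As a quick check, the 94.26% figure follows directly from the two average inference times reported in the abstract (7.55 min and 26 s for 1024 frames). It is a reduction in time, which corresponds to roughly a 17× speedup rather than a 94% increase in speed. The worked arithmetic below uses only those two numbers:

```latex
\begin{aligned}
7.55\,\text{min} &= 7.55 \times 60\,\text{s} = 453\,\text{s} \\
1 - \frac{26\,\text{s}}{453\,\text{s}} &\approx 1 - 0.0574 = 0.9426 \quad (94.26\%) \\
\frac{453\,\text{s}}{26\,\text{s}} &\approx 17.4\times \text{ speedup}
\end{aligned}
```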

https://arxiv.org/abs/2303.12346

In this paper, we propose NUWA-XL, a novel Diffusion over Diffusion architecture for eXtremely Long video generation. Most current work generates long videos segment by segment sequentially, which normally leads to the gap between training on short videos and inferring long videos, and the sequential generation is inefficient. Instead, our approach adopts a “coarse-to-fine” process, in which the video can be generated in parallel at the same granularity. A global diffusion model is applied to generate the keyframes across the entire time range, and then local diffusion models recursively fill in the content between nearby frames. This simple yet effective strategy allows us to directly train on long videos (3376 frames) to reduce the training-inference gap, and makes it possible to generate all segments in parallel. To evaluate our model, we build FlintstonesHD dataset, a new benchmark for long video generation. Experiments show that our model not only generates high-quality long videos with both global and local coherence, but also decreases the average inference time from 7.55min to 26s (by 94.26%) at the same hardware setting when generating 1024 frames.
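The coarse-to-fine schedule described in the abstract can be sketched roughly as follows. This is a toy sketch under stated assumptions, not the authors' implementation. `toy_global_diffusion` and `toy_local_diffusion` are hypothetical stand-ins that return numbers instead of frames, and the local step uses plain linear interpolation rather than diffusion sampling. The window length of 16 and depth of 3 are also assumptions. They were chosen because, when every local window keeps its two endpoint frames and adds 14 new ones, they reproduce the 3376-frame count quoted in the abstract.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

# Toy sketch: a "frame" is just a float so the schedule is easy to inspect.
Frame = float


def toy_global_diffusion(length: int) -> List[Frame]:
    """Hypothetical stand-in for the global model: `length` sparse keyframes
    spanning the whole time range."""
    return [float(i) for i in range(length)]


def toy_local_diffusion(first: Frame, last: Frame, length: int) -> List[Frame]:
    """Hypothetical stand-in for a local model: returns `length` frames whose
    endpoints are exactly the two given frames. Linear interpolation replaces
    real diffusion sampling here."""
    step = (last - first) / (length - 1)
    middle = [first + k * step for k in range(1, length - 1)]
    return [first] + middle + [last]


def coarse_to_fine(length: int, depth: int) -> List[Frame]:
    """Generate keyframes once, then refine every gap `depth - 1` times."""
    frames = toy_global_diffusion(length)
    for _ in range(depth - 1):
        n_gaps = len(frames) - 1
        # Each gap depends only on its two endpoint frames, so all gaps at this
        # level are independent and can be processed concurrently.
        with ThreadPoolExecutor() as pool:
            windows = list(pool.map(toy_local_diffusion,
                                    frames[:-1], frames[1:], [length] * n_gaps))
        refined = [frames[0]]
        for window in windows:
            refined.extend(window[1:])  # skip the left endpoint, already present
        frames = refined
    return frames


def frame_count(length: int, depth: int) -> int:
    """Closed-form count: each refinement level adds (length - 2) frames per gap."""
    n = length
    for _ in range(depth - 1):
        n += (n - 1) * (length - 2)
    return n


if __name__ == "__main__":
    video = coarse_to_fine(length=16, depth=3)
    print(len(video), frame_count(16, 3))  # 3376 3376
```

Under these assumptions the count works out as 16 + 15·14 + 225·14 = 3376. Each window at a given level depends only on its two endpoint frames from the previous level, so all windows at that level can be generated at the same time. This independence is the property the abstract credits for parallel generation. The thread pool here only illustrates it and does not model real GPU parallelism.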
