Today is Tuesday, November 21, 2023. Beijing, sunny.

Let's continue looking at work on prompts, following on from the earlier posts on ICL and long-context models.

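A while ago we noted that the prompt is key to downstream task performance. When applying ChatGPT to real NLP tasks, choosing a suitable prompt matters a great deal for both the SFT and inference stages.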

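Thinking hard in isolation is not a good approach, though. We can draw heavily on existing open-source work, and fortunately such work already exists.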

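This post therefore introduces the NLP task prompts used by LongBench, LooGLE, pCLUE, and Firefly, as well as PromptSource, a toolkit for generating prompts for common English evaluation tasks.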

I. Representative prompts for NLP tasks

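We have recently been looking at evaluation datasets for long-context models. For evaluation, the most important question is how to write a suitable prompt for each task. This section covers the NLP task prompts from LongBench, LooGLE, pCLUE, and Firefly.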
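As a rough illustration of what "one prompt per task" can look like in code, here is a minimal sketch of a task-to-template registry. The task names, the template wording, and the `build_prompt` helper are all hypothetical and are not copied from LongBench, LooGLE, pCLUE, or Firefly; check each repository for the prompts it actually uses.

```python
# Hypothetical task names and template wording, for illustration only.
# They are NOT taken from LongBench, LooGLE, pCLUE, or Firefly.
TASK_PROMPTS = {
    "single_doc_qa": (
        "Read the following text and answer briefly.\n\n"
        "{context}\n\n"
        "Question: {question}\nAnswer:"
    ),
    "summarization": (
        "Write a one-paragraph summary of the following report.\n\n"
        "{context}\n\nSummary:"
    ),
    "classification": (
        "Decide which category the text belongs to. "
        "Candidates: {labels}\n\nText: {context}\nCategory:"
    ),
}


def build_prompt(task: str, **fields: str) -> str:
    """Fill the template registered for `task` with the given fields."""
    if task not in TASK_PROMPTS:
        raise KeyError(f"no prompt template registered for task {task!r}")
    return TASK_PROMPTS[task].format(**fields)


if __name__ == "__main__":
    print(
        build_prompt(
            "single_doc_qa",
            context="Beijing is the capital of China.",
            question="What is the capital of China?",
        )
    )
```

Keeping the templates in one mapping makes it easy to swap the wording for a task without touching the evaluation loop.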

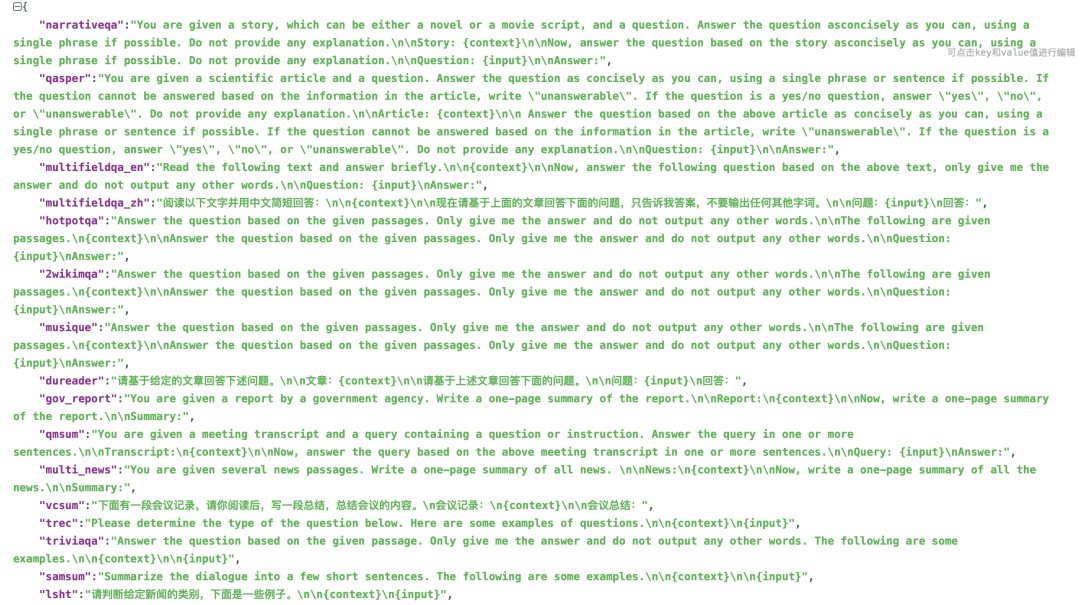

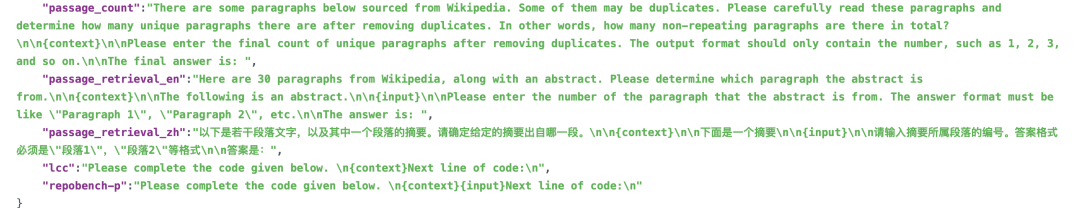

1. LongBench long-context prompts

Address: https://github.com/THUDM/LongBench

2. LooGLE long-context evaluation prompts

Address: https://github.com/bigai-nlco/LooGLE

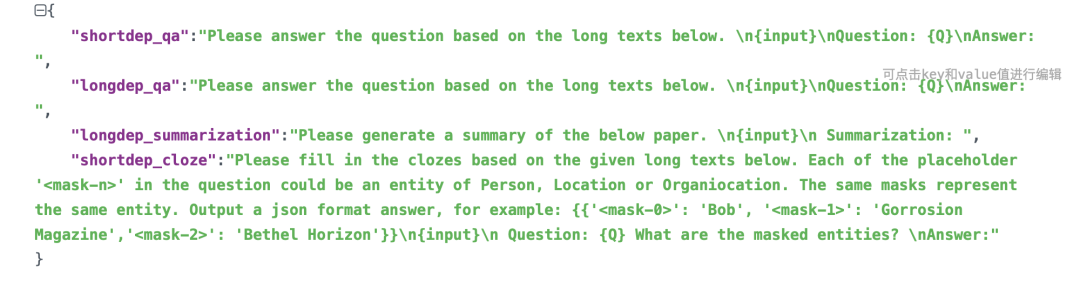

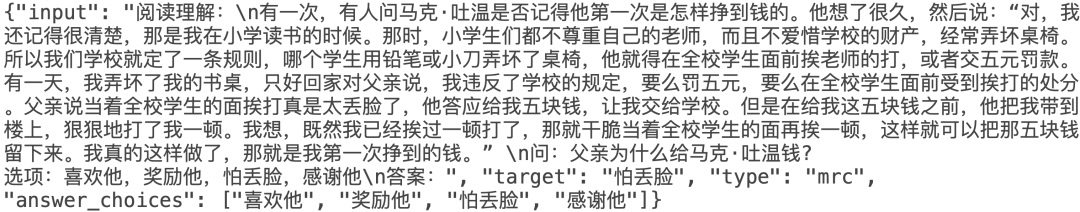

3. pCLUE task evaluation prompts

Address: https://github.com/CLUEbenchmark/pCLUE

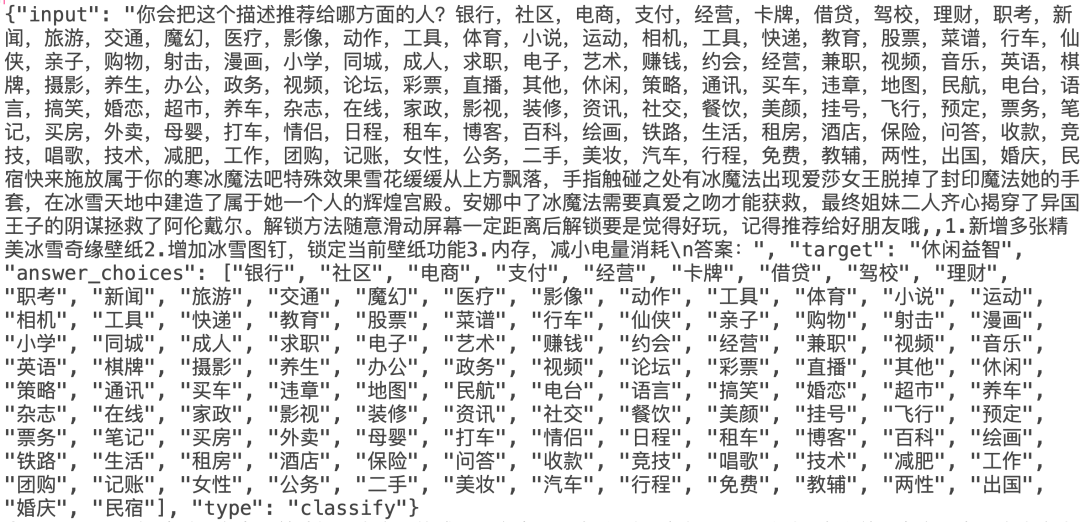

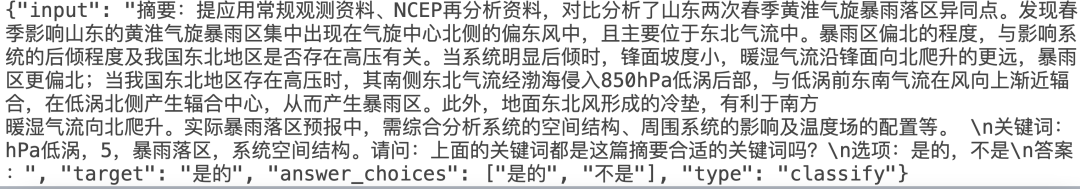

4. Firefly NLP task prompts

Address: https://huggingface.co/datasets/YeungNLP/firefly-train-1.1M/viewer/default/train?row=3

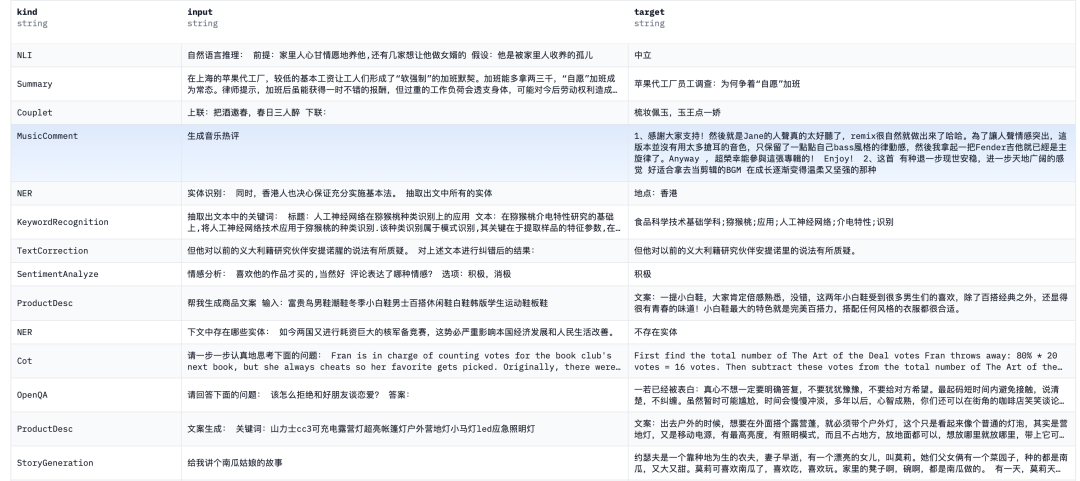

II. PromptSource: a prompt generation tool for English NLP tasks

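PromptSource is a toolkit for creating, sharing, and using natural language prompts. As of January 20, 2022, P3 (the Public Pool of Prompts) contained about 2,000 prompts covering more than 170 English datasets.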

Project address: https://github.com/bigscience-workshop/promptsource

1. Story Cloze prompts

    templates:
      1a4946f9-a0e2-4fbb-aee8-b26ead2cf6b8: !Template
        answer_choices: '{{sentence_quiz1}} ||| {{sentence_quiz2}}'
        id: 1a4946f9-a0e2-4fbb-aee8-b26ead2cf6b8
        jinja: '{{input_sentence_1}} {{input_sentence_2}} {{input_sentence_3}} {{input_sentence_4}}

          What is a possible continuation for the story given the following options ?

          - {{answer_choices | join("\n- ")}} ||| {{answer_choices[answer_right_ending
          -1]}}'
        metadata: !TemplateMetadata
          choices_in_prompt: true
          languages:
          - en
          metrics:
          - Accuracy
          original_task: true
        name: Answer Given options
        reference: ''
      1a9d53bc-eb77-4e7c-af6e-3d15b79d6cf1: !Template
        answer_choices: '{{sentence_quiz1}} ||| {{sentence_quiz2}}'
        id: 1a9d53bc-eb77-4e7c-af6e-3d15b79d6cf1
        jinja: "Read the following story :\n\n{{input_sentence_1}}\n{{input_sentence_2}}\n{{input_sentence_3}}\n{{input_sentence_4}}\n\nChoose a possible ending for the previous story from the following options: \n- {{answer_choices | join(\"\\n- \")}}\n|||\n\n{{answer_choices[answer_right_ending -1]}}"
        metadata: !TemplateMetadata
          choices_in_prompt: true
          languages:
          - en
          metrics:
          - Accuracy
          original_task: true
        name: Choose Story Ending
        reference: ''
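To make the template conventions concrete, here is a hand-written Python equivalent of the "Choose Story Ending" template above. It is only a sketch: PromptSource itself renders the `jinja` field with Jinja, whereas the `choose_story_ending` function below is a hypothetical stand-in that uses plain string operations. It shows the two conventions that matter when reading these files: `answer_choices` lists the options separated by `|||`, and in the `jinja` field the text before `|||` is the model input while the text after it is the target.

```python
from typing import Any, Dict, Tuple


def choose_story_ending(example: Dict[str, Any]) -> Tuple[str, str]:
    """Plain-Python stand-in for the 'Choose Story Ending' template."""
    # answer_choices: '{{sentence_quiz1}} ||| {{sentence_quiz2}}'
    answer_choices = [example["sentence_quiz1"], example["sentence_quiz2"]]

    # The four context sentences, one per line.
    story = "\n".join(example[f"input_sentence_{i}"] for i in range(1, 5))

    rendered = (
        "Read the following story :\n\n"
        f"{story}\n\n"
        "Choose a possible ending for the previous story "
        "from the following options: \n- "
        + "\n- ".join(answer_choices)
        + "\n|||\n\n"
        # answer_right_ending is 1-based, hence the "- 1".
        + answer_choices[example["answer_right_ending"] - 1]
    )

    # Text before '|||' is the model input, text after it is the target.
    prompt, target = (part.strip() for part in rendered.split("|||"))
    return prompt, target


if __name__ == "__main__":
    # Made-up example that only reuses the story_cloze field names.
    example = {
        "input_sentence_1": "Tom woke up late.",
        "input_sentence_2": "He skipped breakfast.",
        "input_sentence_3": "He ran to the bus stop.",
        "input_sentence_4": "The bus was just pulling away.",
        "sentence_quiz1": "Tom waited for the next bus.",
        "sentence_quiz2": "Tom went back to sleep on the bus.",
        "answer_right_ending": 1,
    }
    prompt, target = choose_story_ending(example)
    print(prompt)
    print("TARGET:", target)
```

This sketch assumes the field values never contain `|||` themselves; a real renderer has to escape that separator.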

2. SQuAD task prompts

    templates:
      3d85b5b0-51db-4d72-8ead-d0b3654025ee: !Template
        answer_choices: null
        id: 3d85b5b0-51db-4d72-8ead-d0b3654025ee
        jinja: 'Refer to the passage below and answer the following question:

          Passage: {{context}}

          Question: {{question}}

          |||

          {{answers["text"][0]}}'
        metadata: !TemplateMetadata
          choices_in_prompt: false
          languages:
          - en
          metrics:
          - Squad
          original_task: true
        name: answer_question_given_context
        reference: ''

3. MathQA task prompts

    a313a5f8-53cd-4b76-abb6-fea2ac4e9ef4: !Template
      answer_choices: a ||| b ||| c ||| d ||| e
      id: a313a5f8-53cd-4b76-abb6-fea2ac4e9ef4
      jinja: "One of the five choices are correctly answers the math problem. Can you
        choose the right one? \n\n{{options}}\n\nProblem: {{Problem}}\n|||\n{{correct}}"
      metadata: !TemplateMetadata
        choices_in_prompt: true
        languages:
        - en
        metrics:
        - Accuracy
        original_task: true
      name: first_choice_then_problem
      reference: First give the list of choices and then describe the problem
    a3c2ec72-4af5-42aa-9e8e-ef475fa7c039: !Template
      answer_choices: general ||| physics ||| gain ||| geometry ||| probability ||| other
      id: a3c2ec72-4af5-42aa-9e8e-ef475fa7c039
      jinja: "Given the problem below, in what category would you classify it?\n===\n{{Problem}} \n\nCategories:\n{{answer_choices | join(\"\\n\")}}\n|||\n{{category}}\n"
      metadata: !TemplateMetadata
        choices_in_prompt: true
        languages:
        - en
        metrics:
        - Accuracy
        original_task: false
      name: problem_set_type
      reference: The template asks to generate the category of the problem set

4. Usage

    # Load an example from the ag_news dataset
    >>> from datasets import load_dataset
    >>> dataset = load_dataset("ag_news", split="train")
    >>> example = dataset[1]

    # Load prompts for this dataset
    >>> from promptsource.templates import DatasetTemplates
    >>> ag_news_prompts = DatasetTemplates('ag_news')

    # Print all the prompts available for this dataset. The keys of the dict are the UUIDs that uniquely identify each prompt, and the values are instances of `Template`, which wraps prompts
    >>> print(ag_news_prompts.templates)
    {'24e44a81-a18a-42dd-a71c-5b31b2d2cb39': <promptsource.templates.Template object at 0x7fa7aeb20350>, '8fdc1056-1029-41a1-9c67-354fc2b8ceaf': <promptsource.templates.Template object at 0x7fa7aeb17c10>, '918267e0-af68-4117-892d-2dbe66a58ce9': <promptsource.templates.Template object at 0x7fa7ac7a2310>, '9345df33-4f23-4944-a33c-eef94e626862': <promptsource.templates.Template object at 0x7fa7ac7a2050>, '98534347-fff7-4c39-a795-4e69a44791f7': <promptsource.templates.Template object at 0x7fa7ac7a1310>, 'b401b0ee-6ffe-4a91-8e15-77ee073cd858': <promptsource.templates.Template object at 0x7fa7ac7a12d0>, 'cb355f33-7e8c-4455-a72b-48d315bd4f60': <promptsource.templates.Template object at 0x7fa7ac7a1110>}

    # Select a prompt by its name
    >>> prompt = ag_news_prompts["classify_question_first"]

    # Apply the prompt to the example
    >>> result = prompt.apply(example)
    >>> print("INPUT: ", result[0])
    INPUT:  What label best describes this news article?
    Carlyle Looks Toward Commercial Aerospace (Reuters) Reuters - Private investment firm Carlyle Group,\which has a reputation for making well-timed and occasionally\controversial plays in the defense industry, has quietly placed\its bets on another part of the market.
    >>> print("TARGET: ", result[1])
    TARGET:  Business
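To compare how the different templates phrase the same example, a loop like the following may help. This is a sketch under assumptions: `apply_all_prompts` and `demo_ag_news` are names introduced here and are not part of PromptSource. The helper only relies on the object exposing `all_template_names`, lookup by template name, and an `apply(example)` method on each template, which is how `DatasetTemplates` is used in the session above. `demo_ag_news` needs the `promptsource` and `datasets` packages plus network access, so it is defined but not called.

```python
from typing import Any, Dict, List


def apply_all_prompts(
    dataset_templates: Any, example: Dict[str, Any]
) -> Dict[str, List[str]]:
    """Apply every named template to one example.

    `dataset_templates` is expected to behave like promptsource's
    DatasetTemplates: it has an `all_template_names` attribute, supports
    lookup by template name, and each template has `apply(example)`.
    """
    results: Dict[str, List[str]] = {}
    for name in dataset_templates.all_template_names:
        results[name] = dataset_templates[name].apply(example)
    return results


def demo_ag_news() -> None:
    """Print input/target for every ag_news template (needs extra packages)."""
    # Imported here so the helper above stays usable without these packages.
    from datasets import load_dataset
    from promptsource.templates import DatasetTemplates

    example = load_dataset("ag_news", split="train")[1]
    rendered = apply_all_prompts(DatasetTemplates("ag_news"), example)
    for name, parts in rendered.items():
        print(f"=== {name} ===")
        print("INPUT: ", parts[0])
        # Some templates may not produce a target for a given example.
        if len(parts) > 1:
            print("TARGET:", parts[1])
```

Call `demo_ag_news()` from an environment where both packages are installed to see the rendered prompts side by side.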

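Summary

This post introduced PromptSource, a toolkit for generating prompts for common English evaluation tasks, along with several existing collections of NLP task prompts. They are a useful reference for tasks such as information extraction.

For details on usage, see the link in the references and try it out in practice.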

References

1. https://github.com/bigscience-workshop/promptsource

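About us

Lao Liu (Liu Huanyong) is an NLP open-source enthusiast and practitioner. Homepage: https://liuhuanyong.github.io.

Lao Liu Talks NLP (老刘说NLP) regularly publishes language resources, engineering practice notes, technical summaries, and more. Follow along if you are interested.

To join the community for knowledge graph and event graph practice and related sharing, follow the official account and choose Member Community -> Join Member Group from the menu bar.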
